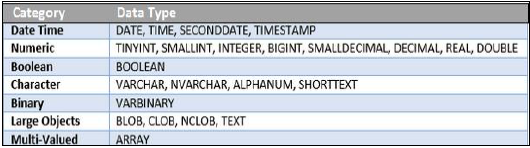

SAP HANA - Overview

SAP HANA is a combination of HANA Database, Data Modeling, HANA Administration and Data Provisioning in one single suite. In SAP HANA, HANA stands for High-Performance Analytic Appliance.

According to former SAP executive Dr. Vishal Sikka, HANA stands for Hasso’s New Architecture. HANA attracted interest by mid-2011, and various Fortune 500 companies then started considering it as an option to meet their Business Warehouse needs.

Features of SAP HANA

The main features of SAP HANA are given below −

SAP HANA is a combination of software and hardware innovation to process huge amounts of real-time data.

It is based on a multi-core architecture in a distributed system environment.

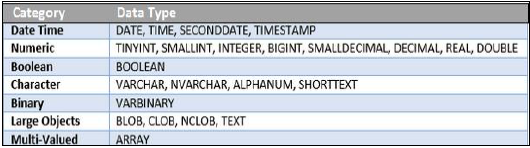

It is based on both row and column types of data storage in the database.

It uses the In-Memory Computing Engine (IMCE) extensively to process and analyze massive amounts of real-time data.

It reduces the total cost of ownership, increases application performance and enables new applications to run in a real-time environment that were not possible before.

It is written in C++ and supports and runs on only one operating system, SUSE Linux Enterprise Server 11 SP1/SP2.

Need for SAP HANA

Today, most successful companies respond quickly to market changes and new opportunities. A key to this is the effective and efficient use of data and information by analysts and managers.

HANA overcomes the limitations mentioned below −

Due to the increase in data volume, it is a challenge for companies to provide access to real-time data for analysis and business use.

Storing and maintaining large data volumes involves high maintenance costs for IT companies.

Due to the unavailability of real-time data, analysis and processing results are delayed.

SAP HANA Vendors

SAP has partnered with leading IT hardware vendors like IBM, Dell and Cisco, and combined their hardware with SAP licensed services and technology to sell the SAP HANA platform.

In total, 11 vendors manufacture HANA appliances and provide onsite support for installation and configuration of the HANA system.

The top vendors include −

- IBM

- Dell

- HP

- Cisco

- Fujitsu

- Lenovo (China)

- NEC

- Huawei

According to statistics provided by SAP, IBM is one of the major vendors of SAP HANA hardware appliances, with a market share of 50-52%; according to another market survey conducted among HANA clients, however, IBM holds up to 70% of the market.

SAP HANA Installation

HANA hardware vendors provide appliances that come preconfigured with the hardware, operating system and SAP software product.

The vendor finalizes the installation with an onsite setup and configuration of the HANA components. This onsite visit includes deploying the HANA system in the data center, connecting it to the organization's network, adapting the SAP system ID, applying updates from Solution Manager, setting up SAP Router connectivity, enabling SSL and other system configuration.

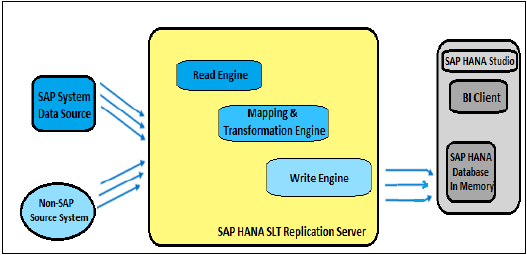

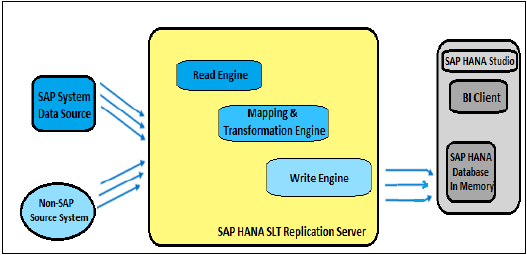

The customer then connects the data source systems and BI clients. HANA Studio is installed on a local system, and the HANA system is added to it to perform data modeling and administration.

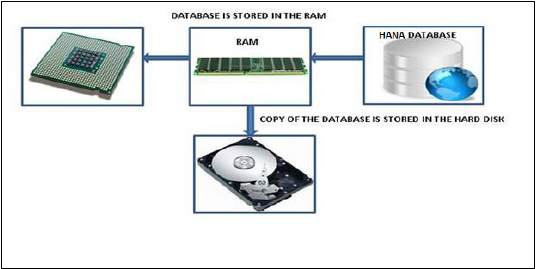

SAP HANA - In-Memory Computing Engine

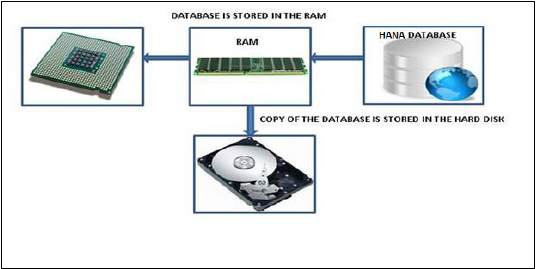

An in-memory database stores all the data from the source system in RAM. In a conventional database system, all data is stored on hard disk. Because the data already resides in memory, the SAP HANA in-memory database does not spend time loading it from hard disk into RAM, which gives multi-core CPUs faster access to data for information processing and analysis.

Features of In-Memory Database

The main features of SAP HANA in-memory database are −

SAP HANA is a hybrid in-memory database.

It combines row-based, column-based and object-oriented technology.

It uses parallel processing with a multi-core CPU architecture.

A conventional database reads data from hard disk in 5 milliseconds. The SAP HANA in-memory database reads data from memory in 5 nanoseconds.

This means memory reads in the HANA database are 1 million times faster than hard disk reads in a conventional database.
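The "1 million times" figure follows directly from the two latencies quoted above. This small sketch just redoes that arithmetic in integer nanoseconds, which avoids floating-point rounding; the constant and function names are illustrative only.

```python
# Latencies quoted in the text, converted to nanoseconds.
DISK_READ_NS = 5 * 1_000_000   # 5 milliseconds = 5,000,000 ns
MEMORY_READ_NS = 5             # 5 nanoseconds


def speedup(slow_ns: int, fast_ns: int) -> int:
    """Return how many times faster the fast read is (integer ratio)."""
    return slow_ns // fast_ns


print(speedup(DISK_READ_NS, MEMORY_READ_NS))  # 1000000
```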

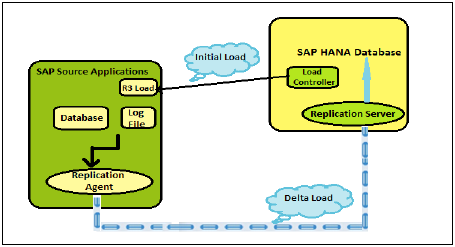

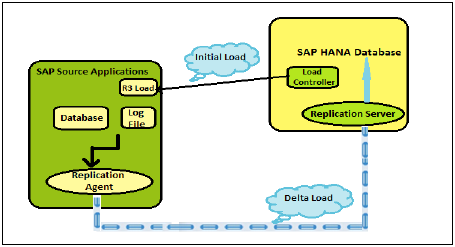

Analysts want to see current data immediately in real time and do not want to wait until it is loaded into the SAP BW system. SAP HANA in-memory processing allows real-time data to be loaded using various data provisioning techniques.

Advantages of In-Memory Database

The HANA database takes advantage of in-memory processing to deliver very fast data retrieval, which appeals to companies struggling with high-scale online transactions or timely forecasting and planning.

Although disk-based storage is still the enterprise standard, the price of RAM has been declining steadily, so memory-intensive architectures are expected to eventually replace slow, mechanical spinning disks and lower the cost of data storage.

In-memory column-based storage provides data compression of up to 11 times, reducing the storage space needed for huge data volumes.

These speed advantages offered by RAM storage are further enhanced by the use of multi-core CPUs, multiple CPUs per node and multiple nodes in a distributed environment.

SAP HANA - Studio

SAP HANA studio is an Eclipse-based tool. It is both the central development environment and the main administration tool for the HANA system. Additional features are −

It is a client tool, which can be used to access a local or remote HANA system.

It provides an environment for HANA administration, HANA information modeling and data provisioning in the HANA database.

SAP HANA Studio can be used on the following platforms −

Microsoft Windows 32-bit and 64-bit versions of Windows XP, Windows Vista and Windows 7

SUSE Linux Enterprise Server SLES 11: x86 64-bit

Mac OS: the HANA studio client is not available

Depending on the HANA Studio installation, not all features may be available. At the time of Studio installation, specify the features you want to install according to your role. To work on the most recent version of HANA studio, Software Life Cycle Manager can be used to update the client.

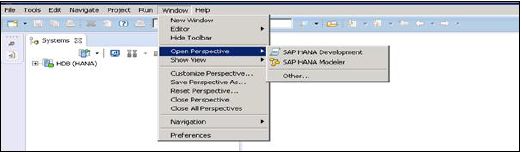

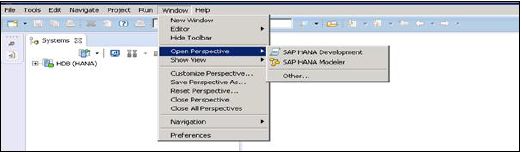

SAP HANA Studio Perspectives / Features

SAP HANA Studio provides perspectives to work on the following HANA features. You can choose a perspective in HANA Studio from the following menu path −

HANA Studio → Window → Open Perspective → Other

SAP HANA Studio Administration

A toolset for various administration tasks, excluding transportable design-time repository objects. General troubleshooting tools like tracing, the catalog browser and the SQL Console are also included.

SAP HANA Studio Database Development

It provides a toolset for content development. In particular, it addresses the Data Marts and ABAP on SAP HANA scenarios, which do not include SAP HANA native application development (XS).

SAP HANA Studio Application Development

The SAP HANA system contains a small web server, which can be used to host small applications. This perspective provides a toolset for developing SAP HANA native applications, such as application code written in JavaScript and HTML.

By default, all features are installed.

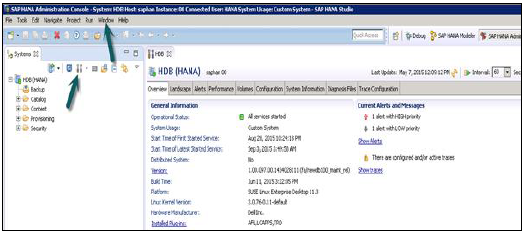

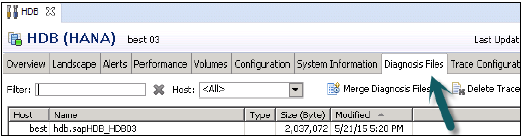

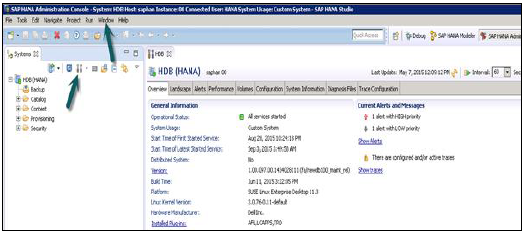

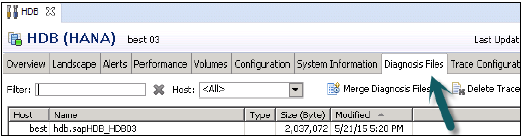

SAP HANA - Studio Administration View

To perform HANA database administration and monitoring, the SAP HANA Administration Console perspective can be used.

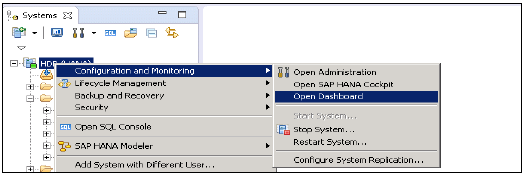

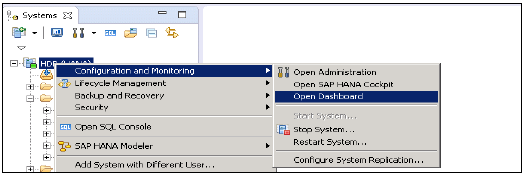

The Administrator Editor can be accessed in several ways −

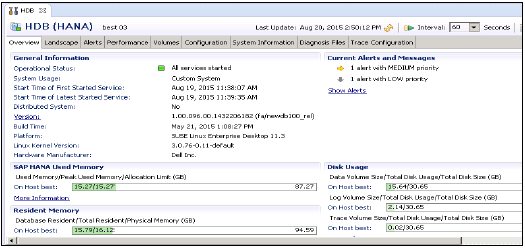

HANA Studio: Administrator Editor

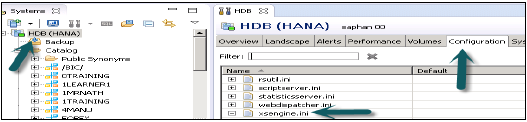

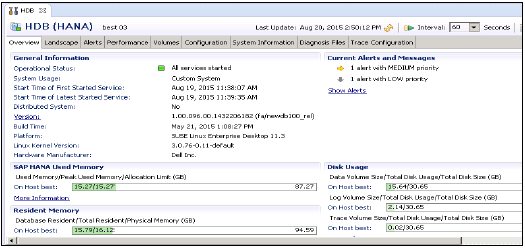

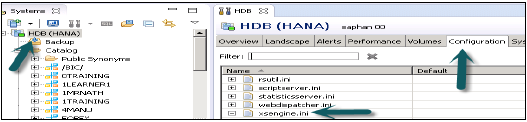

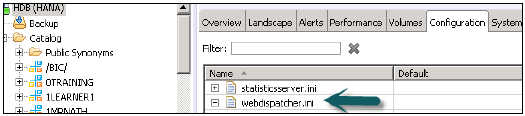

In the Administration view, HANA studio provides multiple tabs to check the configuration and health of the HANA system. The Overview tab shows general information such as operational status, the start time of the first and last started service, version, build date and time, platform, hardware manufacturer, etc.

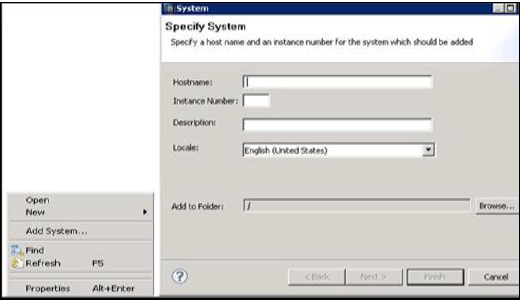

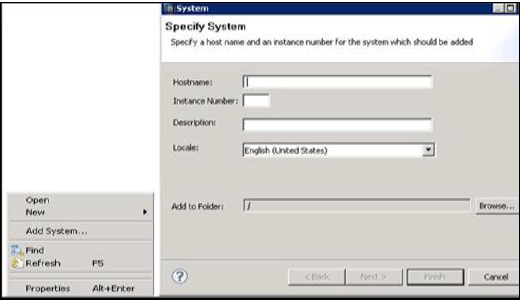

Adding a HANA System to Studio

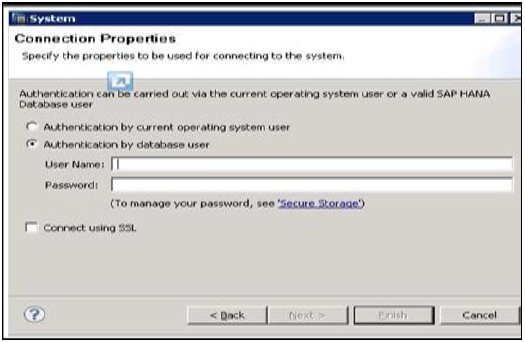

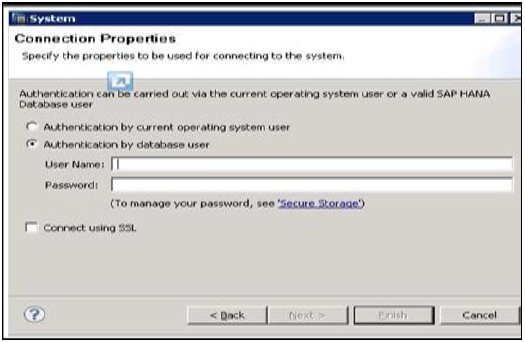

One or more systems can be added to HANA studio for administration and information modeling. To add a new HANA system, the host name, instance number, and database user name and password are required.

- Port 3NN15, where NN is the two-digit instance number, should be open to connect to the database

- Port 31015 for instance number 10

- Port 30015 for instance number 00

- The SSH port should also be open
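The two example ports above (30015 for instance 00, 31015 for instance 10) follow the 3NN15 pattern for the SQL port. The hypothetical helper below only makes that rule explicit; it assumes the convention described in this section and does not cover the other ports a HANA system opens, or multitenant setups that may assign tenant ports differently.

```python
def sql_port(instance_number: int) -> int:
    """Return the SQL port 3NN15 for a two-digit HANA instance number NN.

    Assumes the 3NN15 convention described above (00 -> 30015, 10 -> 31015).
    """
    if not 0 <= instance_number <= 99:
        raise ValueError("instance number must be between 00 and 99")
    # 3NN15 = 30015 + NN * 100
    return 30015 + instance_number * 100


for nn in (0, 10):
    print(f"instance {nn:02d} -> port {sql_port(nn)}")
```

Running it prints `instance 00 -> port 30015` and `instance 10 -> port 31015`, matching the list above.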

Adding a System to HANA Studio

To add a system to HANA studio, follow the given steps.

Right-click in the Navigator space and click Add System. Enter the HANA system details, i.e. host name and instance number, and click Next.

Enter the database user name and password to connect to the SAP HANA database. Click Next and then Finish.

Once you click Finish, the HANA system is added to the System View for administration and modeling. Each HANA system has two main sub-nodes, Catalog and Content.

Catalog and Content

Catalog

It contains all available schemas, i.e. all data structures, tables and data, column views and procedures that can be used in the Content tab.

Content

The Content tab contains the design-time repository, which holds all information about data models created with the HANA Modeler. These models are organized in packages. The Content node provides different views on the same physical data.

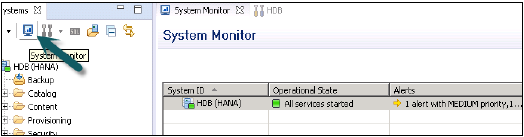

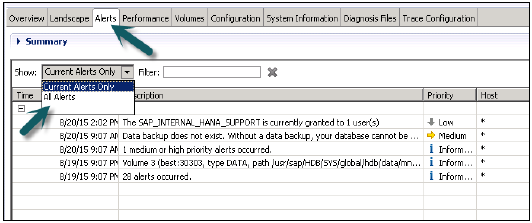

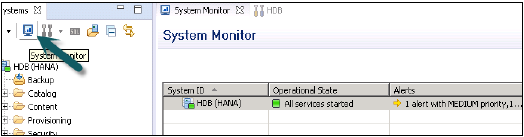

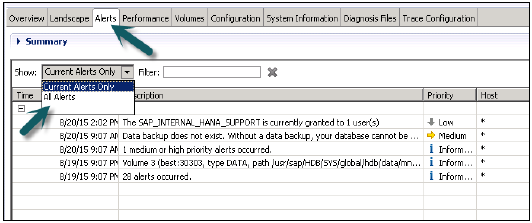

SAP HANA - System Monitor

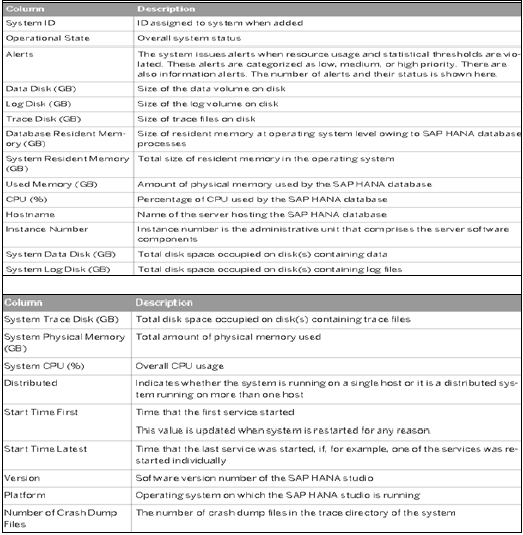

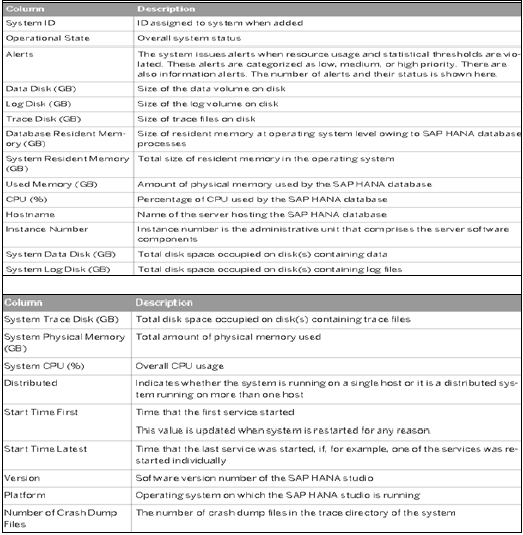

System Monitor in HANA studio provides an overview of all your HANA systems at a glance. From System Monitor, you can drill down into the details of an individual system in the Administration Editor. It shows information about the data disk, log disk and trace disk, as well as alerts on resource usage with their priority.

The following Information is available in System Monitor −

SAP HANA - Information Modeler

SAP HANA Information Modeler, also known as HANA Data Modeler, is the heart of the HANA system. It enables you to create modeling views on top of database tables and implement business logic to create meaningful reports for analysis.

Features of Information Modeler

It provides multiple views of transactional data stored in physical tables of the HANA database for analysis and business logic purposes.

The Information Modeler only works with column-based storage tables.

Information modeling views are consumed by Java- or HTML-based applications or by SAP tools like SAP Lumira or Analysis Office for reporting.

It is also possible to use third-party tools like MS Excel to connect to HANA and create reports.

SAP HANA modeling views exploit the real power of SAP HANA.

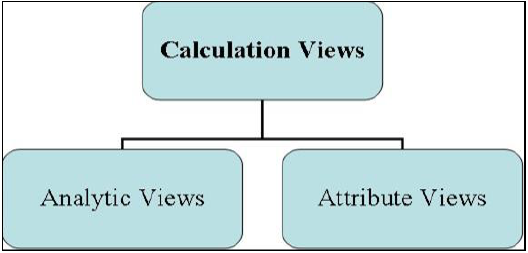

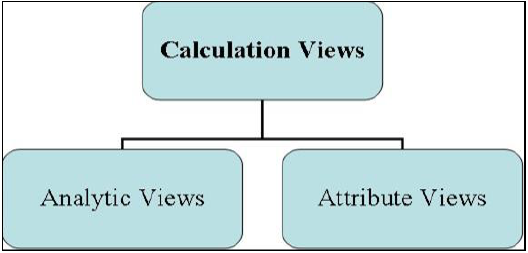

There are three types of Information Views, defined as −

- Attribute View

- Analytic View

- Calculation View

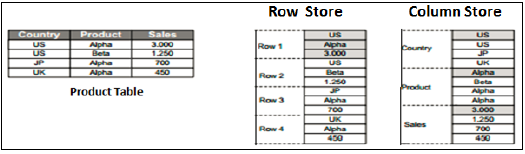

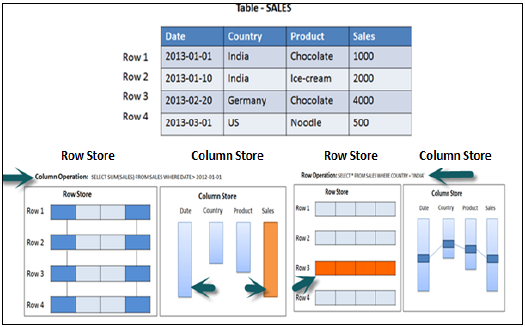

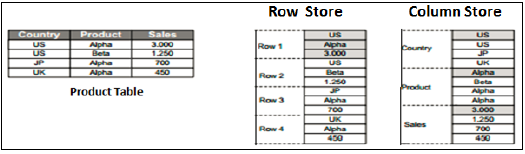

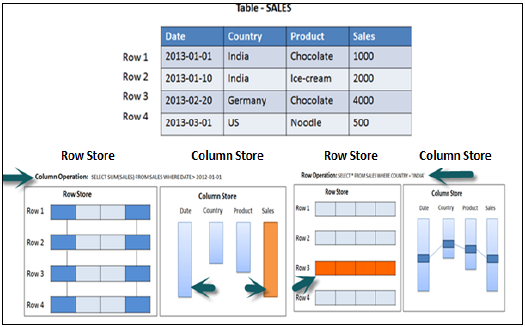

Row vs Column Store

SAP HANA Modeler views can only be created on top of column-based tables. Storing data in column tables is not new. Earlier, it was assumed that storing data in a columnar structure takes more memory and is not performance-optimized.

With the evolution of SAP HANA, HANA used column-based data storage in information views and demonstrated the real benefits of columnar tables over row-based tables.

Column Store

In a column store table, data is stored vertically, so similar data types come together, as shown in the example above. It provides faster memory read and write operations with the help of the In-Memory Computing Engine.

In a conventional database, data is stored in a row-based structure, i.e. horizontally. SAP HANA stores data in both row-based and column-based structures. This provides performance optimization, flexibility and data compression in the HANA database.
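To make the two layouts concrete, this sketch holds the same hypothetical sales records both ways: the row store keeps each record together, while the column store keeps all values of one column together in a single list. It only models the layout idea; it says nothing about how HANA lays out memory internally.

```python
from typing import Dict, List, Tuple

# Hypothetical sample records: (date, product, sales)
Row = Tuple[str, str, int]

row_store: List[Row] = [
    ("2024-01-01", "Pen", 10),
    ("2024-01-01", "Ink", 20),
    ("2024-01-02", "Pen", 30),
]


def to_column_store(rows: List[Row], names: List[str]) -> Dict[str, list]:
    """Pivot row-oriented records into one list of values per column."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


column_store = to_column_store(row_store, ["date", "product", "sales"])
print(column_store["product"])  # ['Pen', 'Ink', 'Pen']
print(column_store["sales"])    # [10, 20, 30]
```

Note how each column list holds values of a single type next to each other, which is what makes the compression techniques described next effective.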

Storing data in a column-based table has the following benefits −

Data compression

Faster read and write access to tables as compared to conventional row-based storage

Flexibility and parallel processing

Aggregations and calculations performed at higher speed

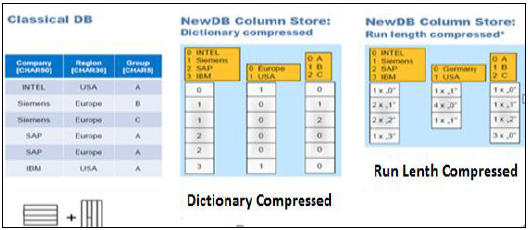

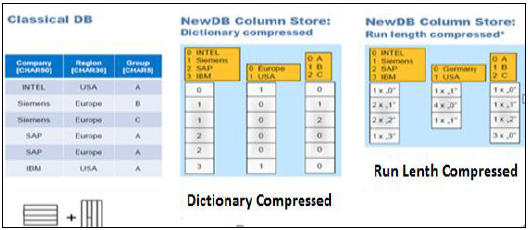

There are various methods and algorithms for storing data in a column-based structure, such as dictionary compression, run-length compression and many more.

In dictionary compression, each distinct value is stored once in a dictionary, and the cells of the column are stored as numbers (value IDs) that refer to it; numeric cells are generally faster to process than character strings.
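A minimal sketch of dictionary encoding for one column, assuming a sorted dictionary (a common choice in textbook descriptions, not a statement about HANA's internal format). The function names and sample data are hypothetical.

```python
from typing import List, Tuple


def dictionary_encode(column: List[str]) -> Tuple[List[str], List[int]]:
    """Store each distinct value once (sorted) and replace cells with integer IDs."""
    dictionary = sorted(set(column))
    value_id = {value: i for i, value in enumerate(dictionary)}
    return dictionary, [value_id[value] for value in column]


def dictionary_decode(dictionary: List[str], ids: List[int]) -> List[str]:
    """Rebuild the original column from the dictionary and the value IDs."""
    return [dictionary[i] for i in ids]


countries = ["Germany", "India", "Germany", "USA", "India", "Germany"]
dictionary, ids = dictionary_encode(countries)
print(dictionary)  # ['Germany', 'India', 'USA']
print(ids)         # [0, 1, 0, 2, 1, 0]
assert dictionary_decode(dictionary, ids) == countries
```

A filter such as `country = 'India'` can then be evaluated by comparing integers against value ID 1 instead of comparing strings.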

In run-length compression, a value that repeats in consecutive cells is stored only once, together with a multiplier in numerical format that records how many times the value repeats in the table.
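Run-length encoding can be sketched with the standard library's `itertools.groupby`, which groups consecutive equal values. The helper names and sample column are hypothetical; the sketch illustrates the technique, not HANA's storage format.

```python
from itertools import groupby
from typing import List, Tuple


def run_length_encode(column: List[str]) -> List[Tuple[str, int]]:
    """Collapse each run of identical consecutive values into (value, count)."""
    return [(value, len(list(run))) for value, run in groupby(column)]


def run_length_decode(runs: List[Tuple[str, int]]) -> List[str]:
    """Expand (value, count) pairs back into the original column."""
    return [value for value, count in runs for _ in range(count)]


status = ["A", "A", "A", "B", "B", "A"]
runs = run_length_encode(status)
print(runs)  # [('A', 3), ('B', 2), ('A', 1)]
assert run_length_decode(runs) == status
```

Because only consecutive repeats are collapsed, this technique pays off most on sorted or low-cardinality columns.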

Functional Difference - Row vs Column Store

It is advisable to use column-based storage if the SQL statement has to perform aggregate functions and calculations. Column-based tables generally perform better when running aggregate functions like SUM, COUNT, MAX and MIN.

Row-based storage is preferred when the output has to return complete rows. The example given below makes this easier to understand.

In the above example, when an aggregate function (SUM) runs on the Sales column with a WHERE clause, the SQL query uses only the Date and Sales columns. If the table uses column-based storage, the query is faster because data is required from only these two columns.

When running a simple SELECT query, the full row has to be returned in the output, so it is advisable to store the table as row-based in this scenario.
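The access pattern described above can be illustrated with a deliberately simplified cost model that just counts how many cells each layout touches for `SUM(sales) WHERE date = ...`. The table and function names are hypothetical, and real engines also weigh memory pages, caches and compression.

```python
from typing import Dict, List, Tuple

# Hypothetical sales table with four columns: date, product, city, sales.
rows: List[Tuple[str, str, str, int]] = [
    ("2024-01-01", "Pen", "Berlin", 10),
    ("2024-01-01", "Ink", "Pune", 20),
    ("2024-01-02", "Pen", "Berlin", 30),
]
columns: Dict[str, list] = {
    "date": [r[0] for r in rows],
    "product": [r[1] for r in rows],
    "city": [r[2] for r in rows],
    "sales": [r[3] for r in rows],
}


def sum_sales_column_store(day: str) -> Tuple[int, int]:
    """SUM(sales) WHERE date = day, touching only the date and sales columns."""
    dates, sales = columns["date"], columns["sales"]
    total = sum(s for d, s in zip(dates, sales) if d == day)
    return total, len(dates) + len(sales)  # (result, cells read)


def sum_sales_row_store(day: str) -> Tuple[int, int]:
    """Same query on a row store: every row is read in full."""
    total = sum(r[3] for r in rows if r[0] == day)
    return total, sum(len(r) for r in rows)  # (result, cells read)


print(sum_sales_column_store("2024-01-01"))  # (30, 6)
print(sum_sales_row_store("2024-01-01"))     # (30, 12)
```

For a `SELECT *` that returns one whole row the picture reverses: the row store hands back one stored record, while the column store has to gather one value from each of the four column lists.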

Information Modeling Views

Attribute View

Attributes are non-measurable elements in a database table. They represent master data and are similar to characteristics in BW. Attribute views are dimensions in a database or are used to join dimensions or other attribute views in modeling.

Important features are −

- Attribute views are used in analytic and calculation views.

- Attribute views represent master data.

- They are used to filter the size of dimension tables in analytic and calculation views.

Analytic View

Analytic views use the power of SAP HANA to perform calculations and aggregation functions on tables in the database. An analytic view has at least one fact table, which holds measures and the primary keys of the dimension tables, surrounded by dimension tables that contain master data.

Important features are −

Analytic views are designed to perform star schema queries.

Analytic views contain at least one fact table and multiple dimension tables with master data, and perform calculations and aggregations.

They are similar to InfoCubes and InfoObjects in SAP BW.

Analytic views can be created on top of attribute views and fact tables and perform calculations like number of units sold, total price, etc.

Calculation Views

Calculation views are used on top of analytic and attribute views to perform complex calculations that are not possible with analytic views. A calculation view combines base column tables, attribute views and analytic views to provide business logic.

Important features are −

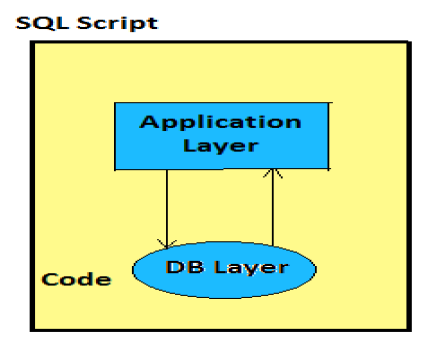

Calculation views are defined either graphically using the HANA modeling feature or scripted in SQL.

They are created to perform complex calculations that are not possible with the other views of the SAP HANA modeler, i.e. attribute and analytic views.

One or more attribute views and analytic views are consumed in a calculation view with the help of built-in functions like Projection, Union, Join and Rank.
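To show what two of these building blocks compute, the plain-Python sketch below imitates a Union node followed by a Rank node (top N rows per partition). The data, field names and helper functions are hypothetical; in HANA these nodes are built graphically or in SQL, not in Python, and this says nothing about how they are executed.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]

# Hypothetical outputs of two analytic views with the same columns.
sales_2023: List[Record] = [
    {"region": "EU", "product": "Pen", "revenue": 120},
    {"region": "EU", "product": "Ink", "revenue": 300},
]
sales_2024: List[Record] = [
    {"region": "EU", "product": "Pad", "revenue": 200},
    {"region": "US", "product": "Pen", "revenue": 150},
]


def union_all(*sources: List[Record]) -> List[Record]:
    """Union node: append the rows of every input (duplicates are kept)."""
    return [row for source in sources for row in source]


def rank_top_n(rows: List[Record], partition: str, order_by: str, n: int) -> List[Record]:
    """Rank node: keep the n rows with the highest order_by value per partition."""
    result: List[Record] = []
    for key in sorted({row[partition] for row in rows}):
        group = [row for row in rows if row[partition] == key]
        group.sort(key=lambda row: row[order_by], reverse=True)
        result.extend(group[:n])
    return result


top = rank_top_n(union_all(sales_2023, sales_2024), "region", "revenue", 2)
for row in top:
    print(row["region"], row["product"], row["revenue"])
# EU Ink 300
# EU Pad 200
# US Pen 150
```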

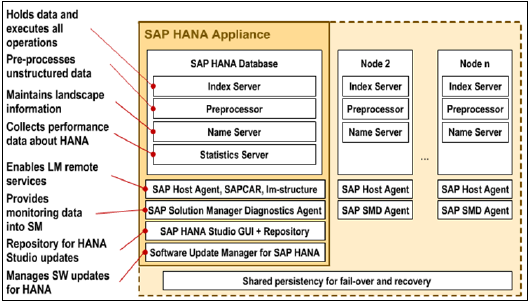

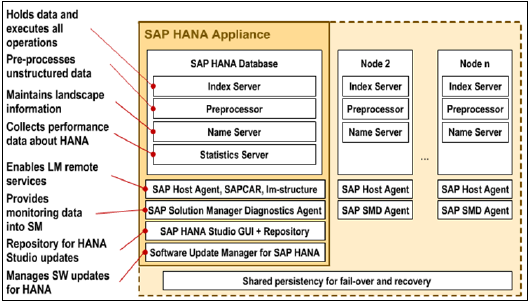

SAP HANA - Core Architecture

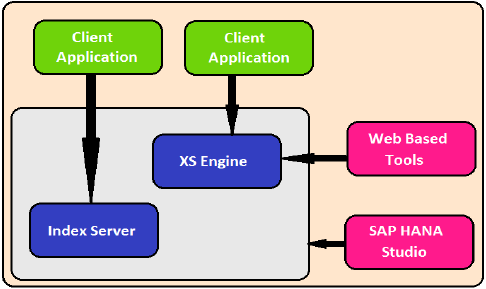

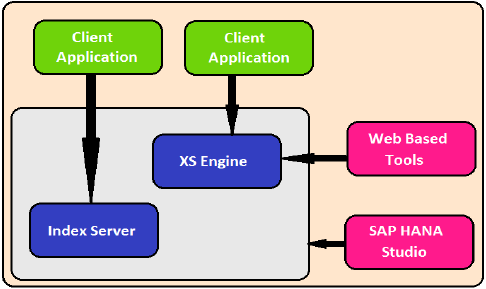

SAP HANA was initially developed in Java and C++ and designed to run only on the SUSE Linux Enterprise Server 11 operating system. The SAP HANA system consists of multiple components that are responsible for delivering the computing power of the HANA system.

The most important component of the SAP HANA system is the Index Server, which contains the SQL/MDX processor to handle query statements for the database.

The HANA system also contains the Name Server, the Preprocessor Server, the Statistics Server and the XS engine, which is used to communicate with and host small web applications, along with various other components.

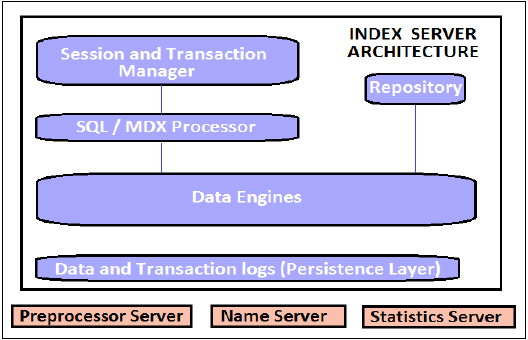

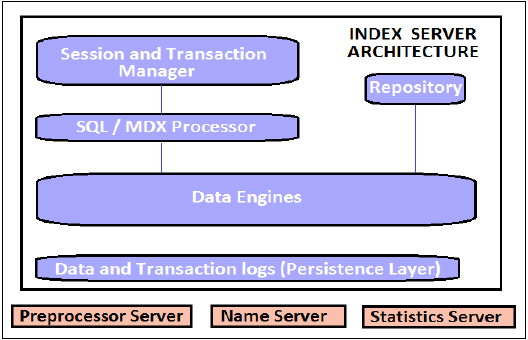

Index Server

The Index Server is the heart of the SAP HANA database system. It contains the actual data and the engines for processing that data. When SQL or MDX is sent to the SAP HANA system, the Index Server takes care of all these requests and processes them. All HANA processing takes place in the Index Server.

The Index Server contains data engines to handle all SQL/MDX statements that come to the HANA database system. It also has a Persistence Layer that is responsible for the durability of the HANA system and ensures that the HANA system is restored to the most recent state after a restart or system failure.

The Index Server also has a Session and Transaction Manager, which manages transactions and keeps track of all running and closed transactions.

Index Server − Architecture

SQL/MDX Processor

It is responsible for processing SQL/MDX transactions, together with the data engines that run the queries. It segments all query requests and directs them to the correct engine for performance optimization.

It also ensures that all SQL/MDX requests are authorized and provides error handling for efficient processing of these statements. It contains several engines and processors for query execution −

MDX (Multidimensional Expressions) is a query language for OLAP systems, just as SQL is a query language for relational databases. The MDX Engine is responsible for handling queries and manipulating multidimensional data stored in OLAP cubes.

The Planning Engine is responsible for running planning operations within the SAP HANA database.

The Calculation Engine converts data into calculation models to create a logical execution plan that supports parallel processing of statements.

The Stored Procedure processor executes procedure calls for optimized processing; it converts OLAP cubes to HANA-optimized cubes.

Transaction and Session Management

It is responsible for coordinating all database transactions and keeping track of all running and closed transactions.

When a transaction is executed or fails, the Transaction Manager notifies the relevant data engine to take the necessary actions.

The session management component is responsible for initializing and managing sessions and connections for the SAP HANA system using predefined session parameters.

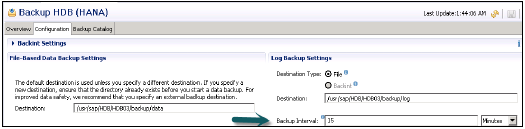

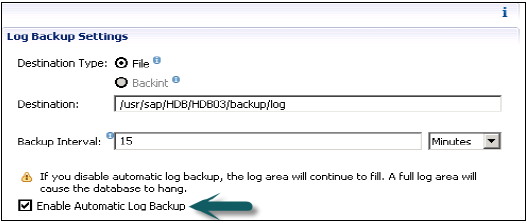

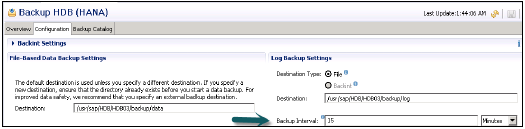

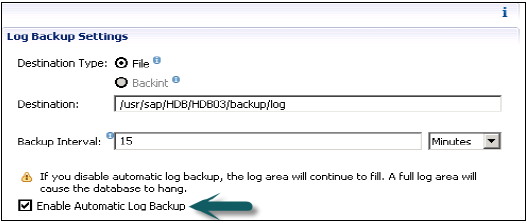

Persistence Layer

It is responsible for the durability and atomicity of transactions in the HANA system. The persistence layer provides a built-in disaster recovery system for the HANA database.

It ensures that the database is restored to the most recent state and that all transactions are either completed or undone in case of a system failure or restart.

It is also responsible for managing data and transaction logs, and it also contains the data backup, log backup and configuration backup of the HANA system. Backups are stored as savepoints in the data volumes via a Savepoint Coordinator, which is normally set to take a savepoint every 5-10 minutes.
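The restart behaviour described above (restore the most recent state, complete or undo transactions) can be illustrated with a deliberately simplified model: the last savepoint snapshot plus a redo log written after it. This is a teaching sketch under stated assumptions, not SAP HANA's actual persistence implementation; the log format and the `recover` function are hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical log format: ("write", txn_id, key, value) or ("commit", txn_id, None, None).
LogEntry = Tuple[str, int, Any, Any]


def recover(savepoint: Dict[str, Any], log: List[LogEntry]) -> Dict[str, Any]:
    """Restart from the last savepoint, then redo only committed transactions.

    Simplified model: the savepoint holds committed data only, and writes of
    transactions that have no commit record are skipped (i.e. undone).
    """
    committed = {txn for kind, txn, _key, _value in log if kind == "commit"}
    state = dict(savepoint)  # copy, so the snapshot itself is untouched
    for kind, txn, key, value in log:
        if kind == "write" and txn in committed:
            state[key] = value  # redo the committed change
    return state


savepoint = {"stock_pen": 100}
log_since_savepoint: List[LogEntry] = [
    ("write", 1, "stock_pen", 90),
    ("commit", 1, None, None),
    ("write", 2, "stock_pen", 50),  # transaction 2 never committed
]
print(recover(savepoint, log_since_savepoint))  # {'stock_pen': 90}
```

Taking savepoints regularly keeps the log that must be replayed after a restart short, which is why the interval matters.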

Preprocessor Server

The Preprocessor Server in the SAP HANA system is used for text data analysis.

The Index Server uses the Preprocessor Server to analyze text data and extract information from it when text search capabilities are used.

Name Server

The Name Server contains the system landscape information of the HANA system. In a distributed environment there are multiple nodes, each with multiple CPUs; the Name Server holds the topology of the HANA system and has information about all the running components and about how data is distributed across them.

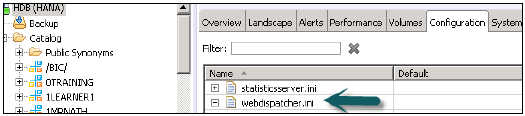

Statistics Server

This server checks and analyzes the health of all components in the HANA system. The Statistics Server is responsible for collecting data related to system resources, their allocation and consumption, and the overall performance of the HANA system.

It also provides historical data on system performance for analysis, to check and fix performance-related issues in the HANA system.

XS Engine

The XS engine helps external Java- and HTML-based applications access the HANA system with the help of an XS client, since the SAP HANA system contains a web server that can be used to host small JavaScript/HTML-based applications.

The XS engine transforms the persistence model stored in the database into a consumption model for clients, exposed via HTTP/HTTPS.

SAP Host Agent

The SAP Host Agent should be installed on all machines that are part of the SAP HANA system landscape. It is used by the Software Update Manager (SUM) to install automatic updates to all components of the HANA system in a distributed environment.

LM Structure

The LM structure of the SAP HANA system contains information about the current installation. The Software Update Manager uses this information to install automatic updates on HANA system components.

SAP Solution Manager (SAP SOLMAN) diagnostic Agent

This diagnostic agent provides all data to SAP Solution Manager to monitor the SAP HANA system. It provides all the information about the HANA database, including its current state and general information.

It provides configuration details of the HANA system when SAP SOLMAN is integrated with the SAP HANA system.

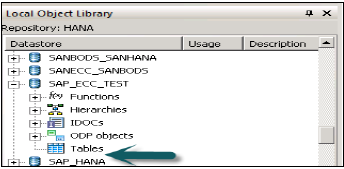

SAP HANA Studio Repository

The SAP HANA studio repository helps HANA developers update their current version of HANA studio to the latest version. The studio repository holds the code that performs this update.

Software Update Manager for SAP HANA

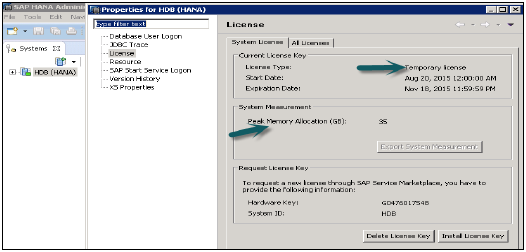

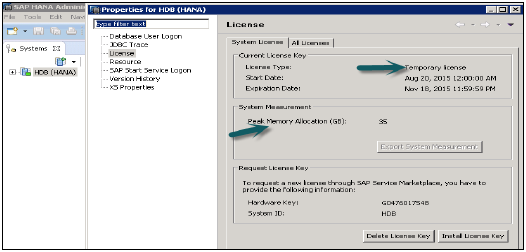

SAP Market Place is used to install updates for SAP systems. The Software Update Manager for HANA helps update the HANA system from SAP Market Place.

It is used for software downloads, customer messages, SAP Notes and requesting license keys for the HANA system. It is also used to distribute HANA studio to end users' systems.

SAP HANA - Modeling

The SAP HANA Modeler option is used to create information views on top of schemas → tables in the HANA database. These views are consumed by Java/HTML-based applications, SAP applications like SAP Lumira and Analysis Office, or third-party software like MS Excel for reporting, to meet business logic, perform analysis and extract information.

HANA modeling is done on top of the tables available in the Catalog tab under Schema in HANA studio, and all views are saved in the Content tab under a package.

You can create a new package under the Content tab in HANA studio by right-clicking Content and choosing New.

All modeling views created inside a package appear under that package in HANA studio and are categorized according to view type.

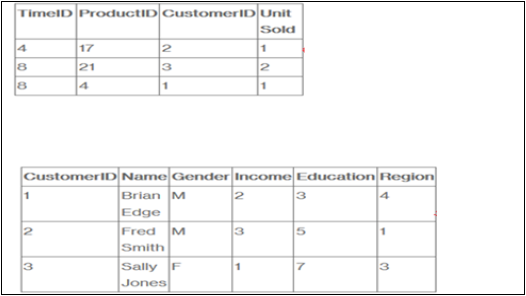

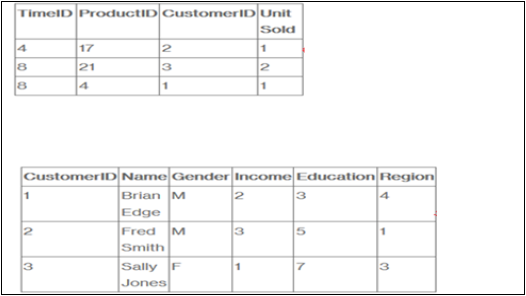

Each view has a different structure for dimension and fact tables. Dimension tables are defined with master data, and the fact table holds the primary keys of the dimension tables and measures like number of units sold, average delay time, total price, etc.

Fact and Dimension Table

A fact table contains the primary keys of the dimension tables and measures. It is joined with dimension tables in HANA views to meet business logic.

Example of Measures − Number of units sold, Total Price, Average Delay time, etc.

A dimension table contains master data and is joined with one or more fact tables to build business logic. Dimension tables are used to create schemas with fact tables and can be normalized.

Example of Dimension Table − Customer, Product, etc.

Suppose a company sells products to customers. Every sale is a fact that happens within the company, and the fact table is used to record these facts.

For example, row 3 in the fact table records the fact that customer 1 (Brian) bought one item on day 4. In a complete example, we would also have a product table and a time table so that we know what Brian bought and exactly when.

The fact table lists events that happen in our company (or at least the events that we want to analyze, such as number of units sold, margin and sales revenue). The dimension tables list the factors (customer, time and product) by which we want to analyze the data.
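The customer example can be sketched in a few lines: the fact table stores only keys and measures, and the dimension table turns `customer_id` into a readable name. Apart from the third fact row (customer 1, Brian, one item on day 4), all data here is hypothetical, as is the helper name.

```python
from typing import Dict, List, Tuple

# Hypothetical dimension table: customer_id -> customer name.
dim_customer: Dict[int, str] = {1: "Brian", 2: "Anna"}

# Hypothetical fact table rows: (customer_id, day, units_sold).
# The third row is the fact from the text: customer 1 bought one item on day 4.
fact_sales: List[Tuple[int, int, int]] = [
    (2, 1, 3),
    (1, 2, 2),
    (1, 4, 1),
]


def units_by_customer(facts: List[Tuple[int, int, int]],
                      customers: Dict[int, str]) -> Dict[str, int]:
    """Join each fact to the customer dimension and total the measure per name."""
    totals: Dict[str, int] = {}
    for customer_id, _day, units in facts:
        name = customers[customer_id]
        totals[name] = totals.get(name, 0) + units
    return totals


print(units_by_customer(fact_sales, dim_customer))  # {'Anna': 3, 'Brian': 3}
```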

SAP HANA - Schema in Data Warehouse

Schemas are logical descriptions of tables in a data warehouse. Schemas are created by joining multiple fact and dimension tables to meet some business logic.

A database uses the relational model to store data. A data warehouse, however, uses schemas that join dimension and fact tables to meet business logic. There are three types of schemas used in a data warehouse −

- Star Schema

- Snowflake Schema

- Galaxy Schema

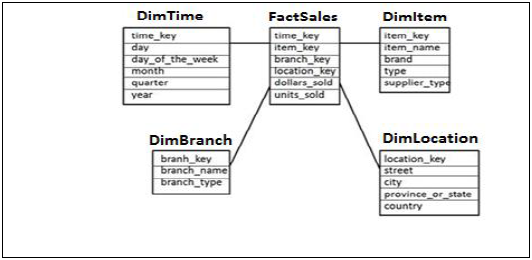

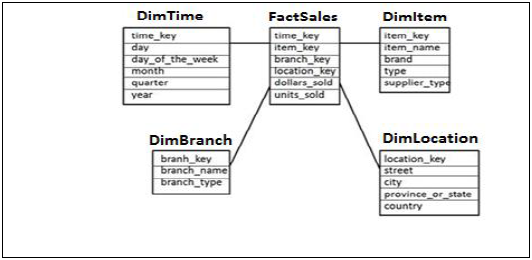

Star Schema

In a star schema, each dimension is joined to one single fact table. Each dimension is represented by only one dimension table and is not further normalized.

A dimension table contains a set of attributes that are used to analyze the data.

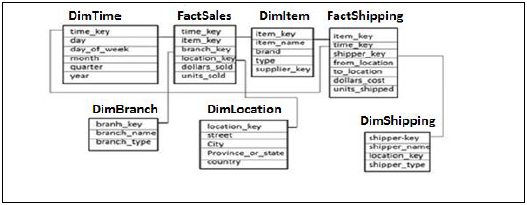

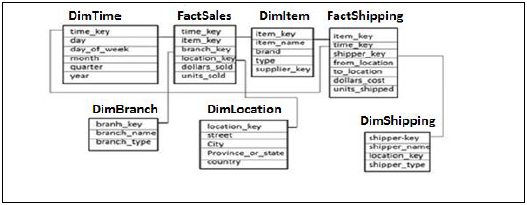

Example − In the example given below, we have a fact table FactSales that holds the primary keys of all the Dim tables and the measures units_sold and dollars_sold to do analysis.

We have four dimension tables − DimTime, DimItem, DimBranch and DimLocation.

Each dimension table is connected to the fact table, as the fact table holds the primary key of each dimension table, which is used to join the two tables.

Facts/measures in the fact table are used for analysis along with the attributes in the dimension tables.
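A star schema query can be sketched as: resolve each key in the fact table against its dimension table, then aggregate a measure by the resulting attributes. This sketch uses only two of the four dimensions (DimItem and DimLocation) with hypothetical rows, and computes roughly what `SUM(dollars_sold) GROUP BY item, city` would return.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Tuple

# Hypothetical dimension tables keyed by their primary keys
# (DimTime and DimBranch are omitted to keep the sketch short).
dim_item: Dict[int, str] = {1: "Laptop", 2: "Phone"}
dim_location: Dict[int, str] = {10: "Pune", 20: "Berlin"}

# Hypothetical FactSales rows: (item_key, location_key, units_sold, dollars_sold).
fact_sales: List[Tuple[int, int, int, int]] = [
    (1, 10, 2, 2000),
    (2, 10, 5, 1500),
    (1, 20, 1, 1100),
    (2, 10, 1, 300),
]


def dollars_by_item_and_city() -> Dict[Tuple[str, str], int]:
    """Star join: resolve each key to its dimension, then SUM(dollars_sold)."""
    totals: DefaultDict[Tuple[str, str], int] = defaultdict(int)
    for item_key, location_key, _units, dollars in fact_sales:
        totals[(dim_item[item_key], dim_location[location_key])] += dollars
    return dict(totals)


print(dollars_by_item_and_city())
# {('Laptop', 'Pune'): 2000, ('Phone', 'Pune'): 1800, ('Laptop', 'Berlin'): 1100}
```

Each dimension is reached in a single hop from the fact table, which is exactly what distinguishes a star schema from the snowflake schema below.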

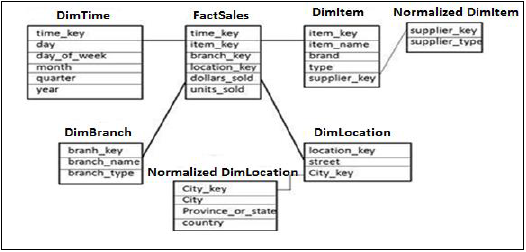

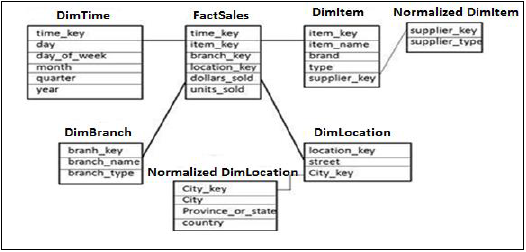

Snowflake Schema

In a snowflake schema, some dimension tables are further normalized, and the dimension tables are connected to a single fact table. Normalization is used to organize the attributes and tables of a database to minimize data redundancy.

Normalization involves breaking a table into smaller, less redundant tables without losing any information; the smaller tables are then joined to the dimension table.

In the above example, the DimItem and DimLocation dimension tables are normalized without losing any information. This is called a snowflake schema, where dimension tables are further normalized into smaller tables.
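Normalizing a dimension can be sketched as splitting repeated attributes into their own table and checking that a join restores the original rows. Here a hypothetical DimLocation is split into DimLocation plus a DimCity table; the columns and data are assumptions chosen only to show the idea.

```python
from typing import Dict, List, Tuple

Location = Tuple[int, str, str, str]  # (location_key, street, city, country)

# Hypothetical denormalized DimLocation rows: "Pune, India" is stored twice.
dim_location: List[Location] = [
    (1, "MG Road", "Pune", "India"),
    (2, "FC Road", "Pune", "India"),
    (3, "Hauptstr.", "Berlin", "Germany"),
]


def snowflake(rows: List[Location]) -> Tuple[List[Tuple[int, str, int]], Dict[int, Tuple[str, str]]]:
    """Move city/country into a separate DimCity table referenced by city_key."""
    city_keys: Dict[Tuple[str, str], int] = {}
    locations: List[Tuple[int, str, int]] = []
    for location_key, street, city, country in rows:
        # Reuse the key of a known (city, country), else assign the next one.
        city_key = city_keys.setdefault((city, country), len(city_keys) + 1)
        locations.append((location_key, street, city_key))
    return locations, {key: pair for pair, key in city_keys.items()}


def rejoin(locations: List[Tuple[int, str, int]], cities: Dict[int, Tuple[str, str]]) -> List[Location]:
    """Join the smaller tables back together to show that no information was lost."""
    return [(key, street, *cities[city_key]) for key, street, city_key in locations]


locations, cities = snowflake(dim_location)
print(cities)  # {1: ('Pune', 'India'), 2: ('Berlin', 'Germany')}
assert rejoin(locations, cities) == dim_location
```

"Pune, India" is now stored once in DimCity, at the cost of an extra join when the fact table is queried.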

Galaxy Schema

In a galaxy schema, there are multiple fact tables and dimension tables. Each fact table stores the primary keys of a few dimension tables and measures/facts to do analysis.

In the above example, there are two fact tables, FactSales and FactShipping, and multiple dimension tables joined to the fact tables. Each fact table contains the primary keys of the joined Dim tables and measures/facts to perform analysis.

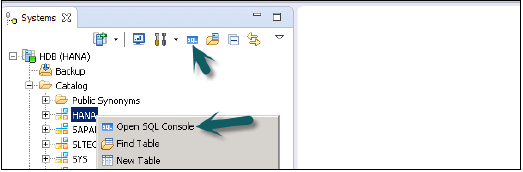

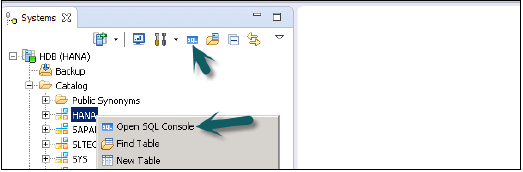

SAP HANA - Tables

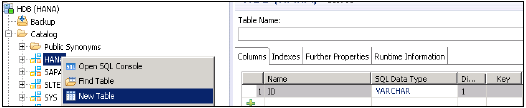

Tables in the HANA database can be accessed from HANA Studio in the Catalog tab under Schemas. New tables can be created using the two methods given below −

- Using SQL editor

- Using GUI option

SQL Editor in HANA Studio

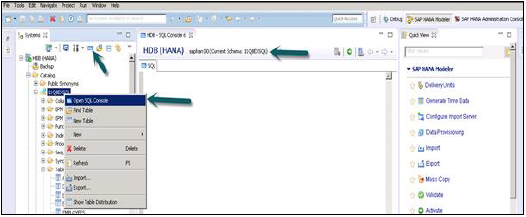

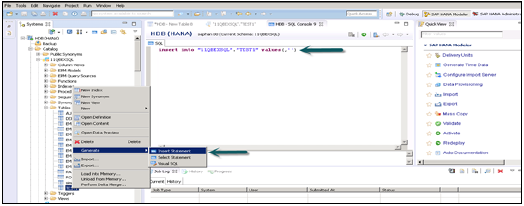

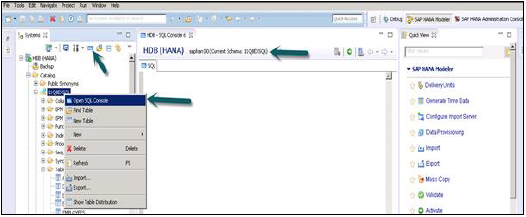

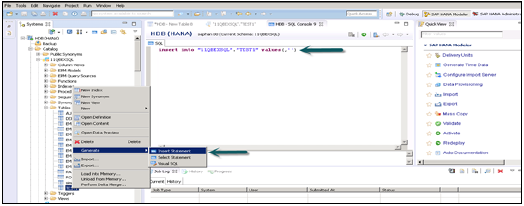

The SQL console can be opened by selecting the schema in which the new table has to be created and using the System View SQL Editor option, or by right-clicking the schema name, as shown below −

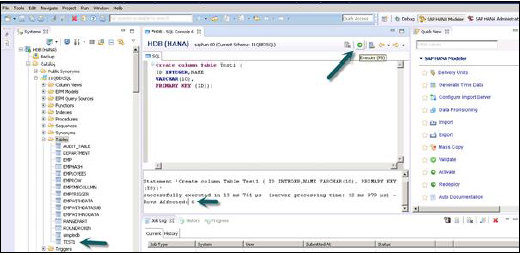

Once the SQL editor is open, the schema name can be confirmed from the name written at the top of the SQL editor. A new table can be created using the SQL CREATE TABLE statement −

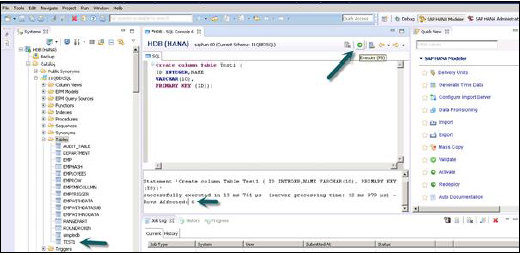

    Create column Table Test1 (
        ID INTEGER,
        NAME VARCHAR(10),
        PRIMARY KEY (ID)
    );

In this SQL statement, we have created a column table “Test1” and defined the data types of its columns and its primary key.

Once you have written the CREATE TABLE query, click the Execute option at the top right of the SQL editor. Once the statement is executed, you get a confirmation message as shown in the snapshot given below −

Statement 'Create column Table Test1 (ID INTEGER,NAME VARCHAR(10), PRIMARY KEY (ID))' successfully executed in 13 ms 761 μs (server processing time: 12 ms 979 μs) − Rows Affected: 0

The execution message also shows the time taken to execute the statement. Once the statement has executed successfully, right-click the Tables node under the schema name in the System View and refresh. The new table will appear in the list of tables under the schema name.

The INSERT statement is used to enter data into the table using the SQL editor.

    Insert into TEST1 Values (1,'ABCD');
    Insert into TEST1 Values (2,'EFGH');

Click Execute.

You can right-click the table name and use Open Data Definition to see the data types of the table, or Open Data Preview/Open Content to see the table contents.
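The same create, insert and read cycle can also be scripted. To connect to a real HANA system from Python, SAP's `hdbcli` driver offers a DB-API 2.0 style `dbapi.connect(...)` (check its documentation for the exact connection parameters). So that this sketch runs without a HANA server, it uses the standard library's SQLite as a stand-in, which means the HANA-specific `column` keyword has to be dropped.

```python
import sqlite3

# In-memory SQLite database used only as a local stand-in for HANA.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# SQLite has no column/row table types, so the HANA keyword COLUMN is omitted.
cur.execute("CREATE TABLE Test1 (ID INTEGER, NAME VARCHAR(10), PRIMARY KEY (ID))")

# Parameterized inserts of the same two rows as in the INSERT statements above.
cur.executemany("INSERT INTO Test1 VALUES (?, ?)", [(1, "ABCD"), (2, "EFGH")])
conn.commit()

# Equivalent of Open Data Preview: read the table contents back.
rows = cur.execute("SELECT ID, NAME FROM Test1 ORDER BY ID").fetchall()
print(rows)  # [(1, 'ABCD'), (2, 'EFGH')]
conn.close()
```

Using `?` placeholders instead of pasting values into the SQL text keeps quoting correct and avoids SQL injection when values come from user input.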

Creating Table using GUI Option

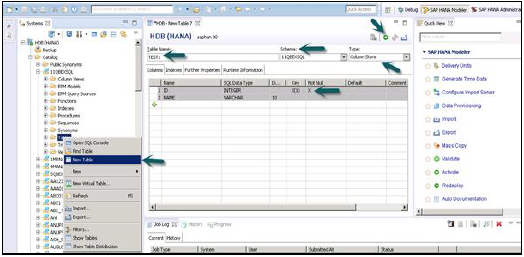

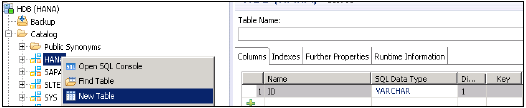

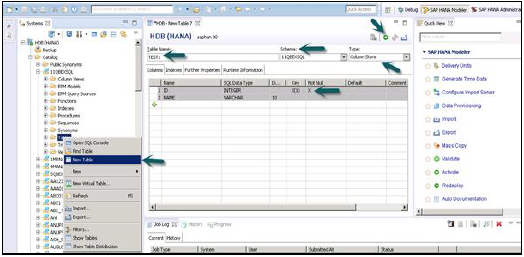

Another way to create a table in the HANA database is to use the GUI option in HANA Studio.

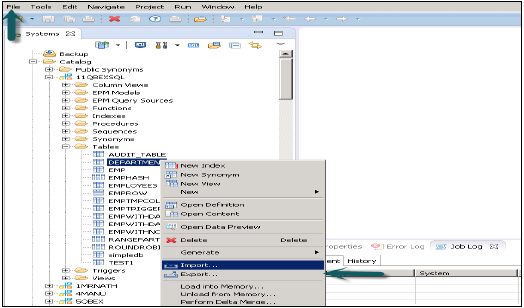

Right-click the Tables node under the schema → select the ‘New Table’ option as shown in the snapshot given below.

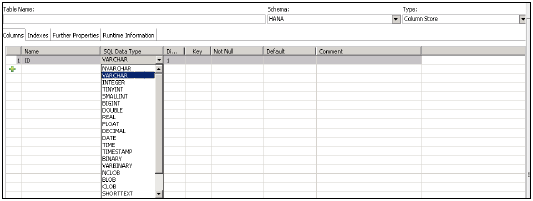

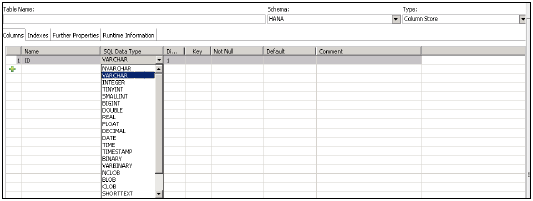

Once you click New Table, a window opens where you enter the table name, choose the schema name from the drop-down and define the table type from the drop-down list: Column Store or Row Store.

Define the data types as shown below. Columns can be added by clicking the + sign, and a primary key can be chosen by clicking the cell under Primary Key next to the column name. Not Null is active by default.

Once the columns are added, click Execute.

Once you execute (F8), right-click the Tables node → Refresh. The new table will appear in the list of tables under the chosen schema. The Insert option below can be used to insert data into the table, and a SELECT statement can be used to see the content of the table.

Inserting Data in a table using GUI in HANA studio

You can right-click the table name and use Open Data Definition to see the data types of the table, or Open Data Preview/Open Content to see the table contents.

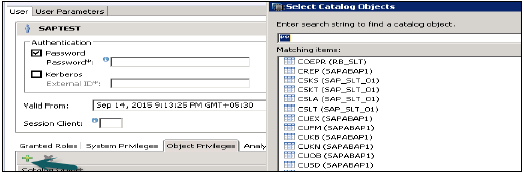

To use tables from one schema to create views, we must grant access on that schema to the default user that runs all views in HANA modeling (_SYS_REPO). This can be done by going to the SQL editor and running this query −

GRANT SELECT ON SCHEMA "<SCHEMA_NAME>" TO _SYS_REPO WITH GRANT OPTION

SAP HANA - Packages

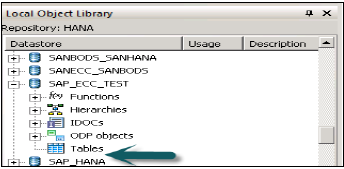

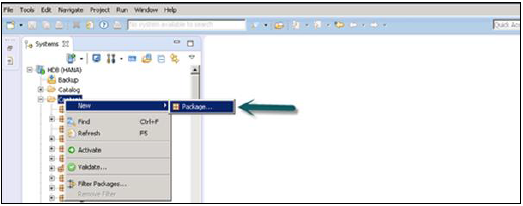

SAP HANA packages are shown under the Content tab in HANA studio. All HANA modeling is saved inside packages.

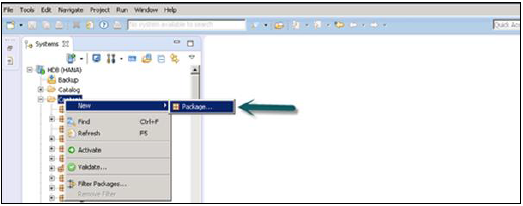

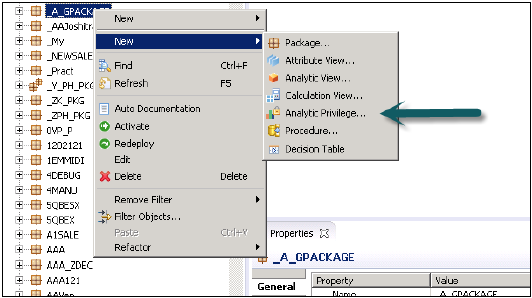

You can create a new package by right-clicking the Content tab → New → Package

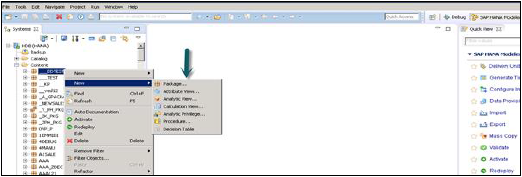

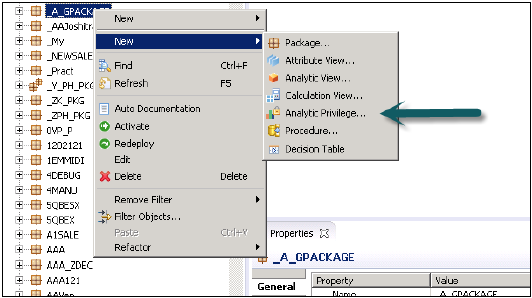

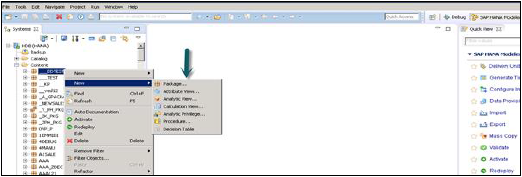

You can also create a sub-package under a package by right-clicking the package name. When we right-click a package, we get 7 options: we can create HANA views (attribute views, analytic views and calculation views) under a package.

You can also create decision tables, define analytic privileges and create procedures in a package.

When you right-click a package and click New, you can also create sub-packages in it. You have to enter a package name and description when creating a package.

SAP HANA - Attribute View

Attribute views in SAP HANA modeling are created on top of dimension tables. They are used to join dimension tables or other attribute views. You can also copy a new attribute view from existing attribute views inside other packages, but that doesn't let you change the view attributes.

Characteristics of Attribute View

Attribute Views in HANA are used to join Dimension tables or other Attribute Views.

Attribute views are used in analytic and calculation views to pass master data for analysis.

They are similar to characteristics in BW and contain master data.

Attribute views are used for performance optimization with large dimension tables: you can limit the number of attributes in an attribute view that are further used for reporting and analysis.

Attribute Views are used to model master data to give some context.

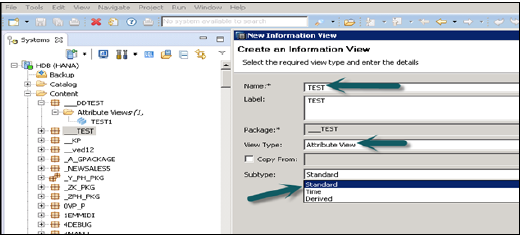

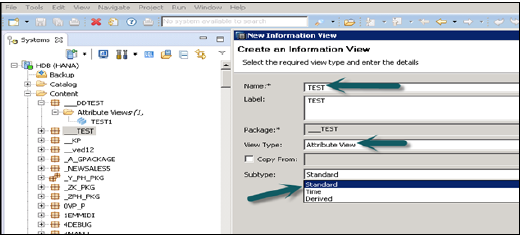

How to Create an Attribute View?

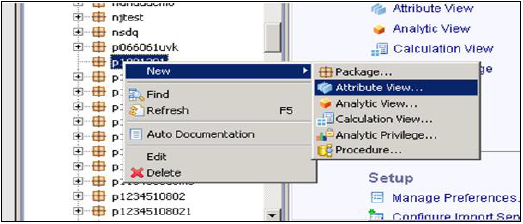

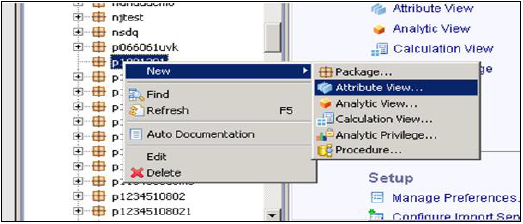

Choose the package under which you want to create an attribute view. Right-click the package → go to New → Attribute View

When you click Attribute View, a new window opens. Enter the attribute view name and description. From the drop-down list, choose the view type and subtype. There are three subtypes of attribute views − Standard, Time and Derived.

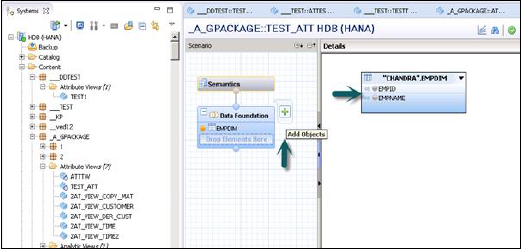

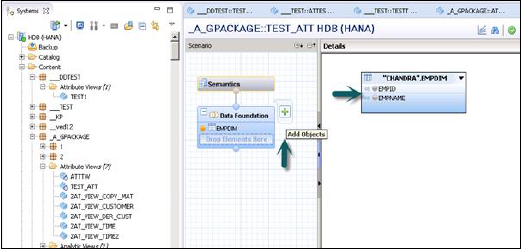

A Time subtype Attribute View is a special type of Attribute View that adds a Time Dimension to the Data Foundation. When you enter the Attribute View name, type and subtype and click Finish, three work panes open −

Scenario pane, which contains the Data Foundation and Semantic Layer.

Details pane, which shows the attributes of all tables added to the Data Foundation and the joins between them.

Output pane, where you can add attributes from the Details pane to filter in the report.

You can add objects to the Data Foundation by clicking the ‘+’ sign next to Data Foundation. You can add multiple Dimension tables and Attribute Views in the Scenario pane and join them using a Primary Key.

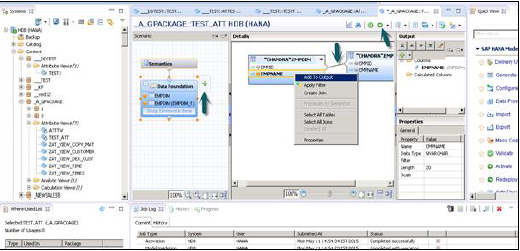

When you click Add Object in the Data Foundation, a search bar appears from which you can add Dimension tables and Attribute Views to the Scenario pane. Once tables or Attribute Views are added to the Data Foundation, they can be joined using a Primary Key in the Details pane, as shown below.

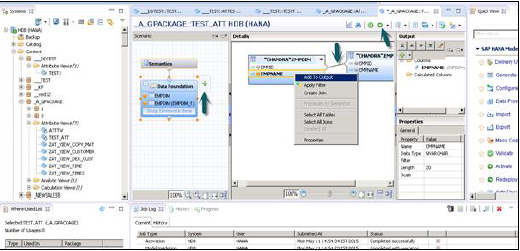

Once the join is done, select multiple attributes in the Details pane, right-click and choose Add to Output. The selected columns are added to the Output pane. Now click Activate; a confirmation message appears in the job log.
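The join-and-output step can be pictured in plain SQL. The following is a minimal local sketch that uses Python's built-in `sqlite3` module as a stand-in for HANA. It is not how HANA executes an Attribute View. The tables `DIM_PRODUCT` and `DIM_CATEGORY` and their columns are hypothetical. The columns selected in the query play the role of attributes added to the Output pane, so `LIST_PRICE` stays hidden.

```python
import sqlite3

# Hypothetical dimension tables; SQLite is only a local stand-in for HANA.
ATTR_SETUP = """
CREATE TABLE DIM_CATEGORY (CATEGORY_ID TEXT PRIMARY KEY, CATEGORY_NAME TEXT);
CREATE TABLE DIM_PRODUCT (
    PRODUCT_ID   TEXT PRIMARY KEY,
    PRODUCT_NAME TEXT,
    CATEGORY_ID  TEXT,
    LIST_PRICE   REAL
);
INSERT INTO DIM_CATEGORY VALUES ('C1', 'Laptops'), ('C2', 'Phones');
INSERT INTO DIM_PRODUCT VALUES ('P1', 'Notebook 14', 'C1', 899.0),
                               ('P2', 'Handset X', 'C2', 499.0);
"""

# Join on the key column and expose only the attributes "added to Output";
# LIST_PRICE is deliberately not selected.
ATTRIBUTE_VIEW = """
SELECT p.PRODUCT_ID, p.PRODUCT_NAME, c.CATEGORY_NAME
FROM DIM_PRODUCT AS p
JOIN DIM_CATEGORY AS c ON p.CATEGORY_ID = c.CATEGORY_ID
ORDER BY p.PRODUCT_ID
"""


def preview_attribute_view():
    """Return the rows a data preview of this hypothetical view would show."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(ATTR_SETUP)
        return conn.execute(ATTRIBUTE_VIEW).fetchall()
    finally:
        conn.close()


for row in preview_attribute_view():
    print(row)
```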

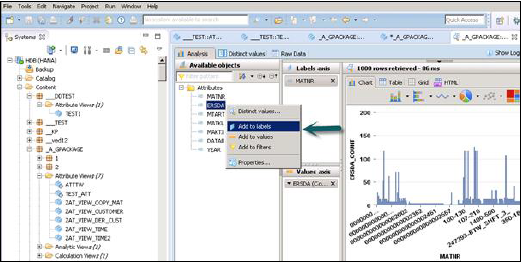

Now you can right-click the Attribute View and choose Data Preview.

Note − When a View is not activated, it has a diamond mark on it. Once you activate it, the diamond disappears, which confirms that the View has been activated successfully.

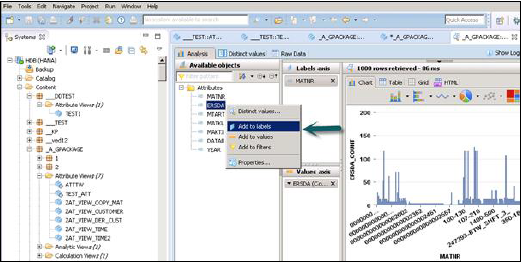

Once you click Data Preview, all the attributes that have been added to the Output pane are shown under Available Objects.

These objects can be added to the Labels axis and Values axis by right-clicking and adding them, or by dragging them.

SAP HANA - Analytic View

An Analytic View takes the form of a star schema, in which one Fact table is joined to multiple Dimension tables. Analytic Views use the real power of SAP HANA to perform complex calculations and aggregate functions by joining tables in the form of a star schema and executing star schema queries.

Characteristics of Analytic View

Following are the properties of SAP HANA Analytic View −

Analytic Views are used to perform complex calculations and aggregate functions such as Sum, Count, Min, Max, etc.

Analytic Views are designed to run star schema queries.

Each Analytic View has one Fact table surrounded by multiple Dimension tables. The Fact table contains the primary key of each Dimension table, along with the measures.

Analytic Views are similar to InfoObjects and InfoSets in SAP BW.

How to Create an Analytic View?

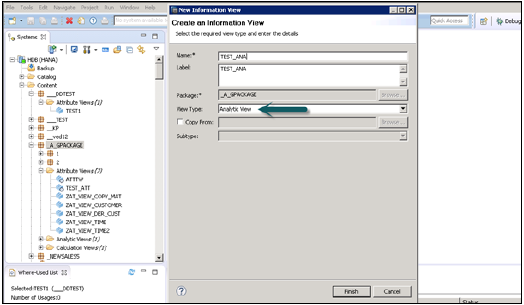

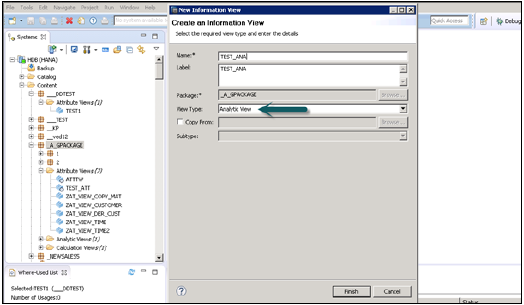

Choose the Package under which you want to create an Analytic View. Right-click the Package → New → Analytic View. When you click Analytic View, a new window opens. Enter the View name and description, choose the View Type from the drop-down list and click Finish.

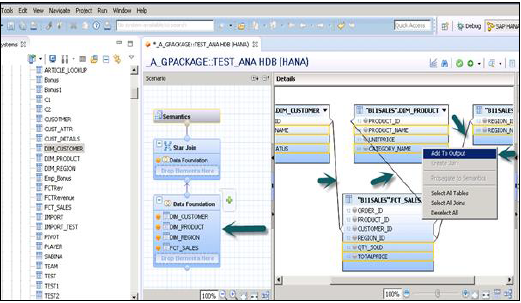

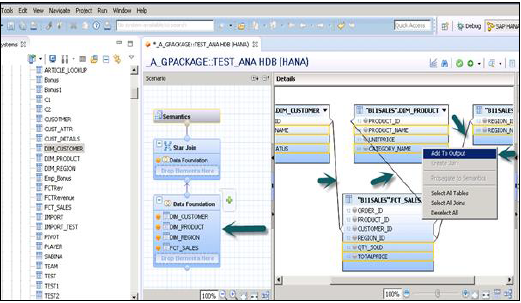

When you click Finish, you can see an Analytic View with the Data Foundation and Star Join options.

Click on Data Foundation to add Dimension and Fact tables. Click on Star Join to add Attribute Views.

Add Dimension and Fact tables to the Data Foundation using the “+” sign. In the example below, three Dimension tables (DIM_CUSTOMER, DIM_PRODUCT, DIM_REGION) and one Fact table (FCT_SALES) have been added to the Details pane. Each Dimension table is joined to the Fact table using the Primary Keys stored in the Fact table.
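A star schema query of this shape can be sketched in SQL. The sketch below runs it locally with Python's `sqlite3` module rather than HANA. The table names follow the example above. All column names (`CUSTOMER_ID`, `SALES_AMOUNT`, and so on) and all data are assumptions. The Fact table carries one key per Dimension table plus the measure, and the query aggregates the measure per region and product.

```python
import sqlite3

# Table names from the tutorial's example; columns and rows are hypothetical.
STAR_SETUP = """
CREATE TABLE DIM_CUSTOMER (CUSTOMER_ID INTEGER PRIMARY KEY, CUSTOMER_NAME TEXT);
CREATE TABLE DIM_PRODUCT  (PRODUCT_ID  INTEGER PRIMARY KEY, PRODUCT_NAME  TEXT);
CREATE TABLE DIM_REGION   (REGION_ID   INTEGER PRIMARY KEY, REGION_NAME   TEXT);
-- The fact table holds one key per dimension plus the measure
CREATE TABLE FCT_SALES (
    CUSTOMER_ID INTEGER, PRODUCT_ID INTEGER, REGION_ID INTEGER, SALES_AMOUNT INTEGER
);
INSERT INTO DIM_CUSTOMER VALUES (1, 'Acme'), (2, 'Globex');
INSERT INTO DIM_PRODUCT  VALUES (10, 'Laptop'), (20, 'Phone');
INSERT INTO DIM_REGION   VALUES (100, 'EMEA'), (200, 'APJ');
INSERT INTO FCT_SALES VALUES
    (1, 10, 100, 1000), (2, 10, 100, 1500),
    (1, 20, 200, 300),  (2, 20, 200, 200),
    (1, 20, 100, 400);
"""

# Star join: every dimension is joined to the fact table on its key,
# then the measure is aggregated per combination of attributes.
STAR_QUERY = """
SELECT r.REGION_NAME,
       p.PRODUCT_NAME,
       SUM(f.SALES_AMOUNT)           AS TOTAL_SALES,
       COUNT(DISTINCT c.CUSTOMER_ID) AS CUSTOMERS
FROM FCT_SALES AS f
JOIN DIM_CUSTOMER AS c ON f.CUSTOMER_ID = c.CUSTOMER_ID
JOIN DIM_PRODUCT  AS p ON f.PRODUCT_ID  = p.PRODUCT_ID
JOIN DIM_REGION   AS r ON f.REGION_ID   = r.REGION_ID
GROUP BY r.REGION_NAME, p.PRODUCT_NAME
ORDER BY r.REGION_NAME, p.PRODUCT_NAME
"""


def run_star_query():
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(STAR_SETUP)
        return conn.execute(STAR_QUERY).fetchall()
    finally:
        conn.close()


for row in run_star_query():
    print(row)
```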

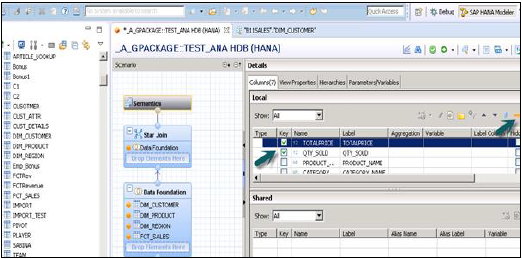

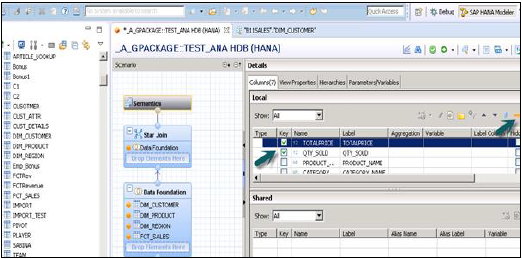

Select attributes from the Dimension and Fact tables and add them to the Output pane, as shown in the snapshot above. Now change the type of the facts from the Fact table to measures.

Click the Semantic layer, choose the facts and click the measures sign, as shown below, to change them to measures. Then activate the View.

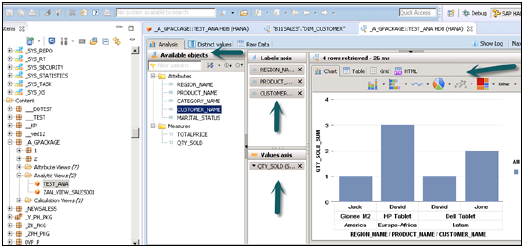

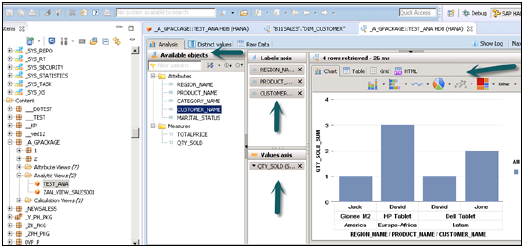

Once you activate the View and click Data Preview, all attributes and measures are listed under Available Objects. Add attributes to the Labels axis and measures to the Values axis for analysis.

You can also choose different types of charts and graphs.

SAP HANA - Calculation View

Calculation Views are used to consume Analytic Views, Attribute Views, other Calculation Views and base column tables. They are used to perform complex calculations that are not possible with other types of Views.

Characteristics of Calculation View

Below are a few characteristics of Calculation Views −

Calculation Views are used to consume Analytic, Attribute and other Calculation Views.

They are used to perform complex calculations, which are not possible with other Views.

There are two ways to create Calculation Views: the SQL Editor or the Graphical Editor.

They have built-in Union, Join, Projection and Aggregation nodes.
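Here is a small in-memory Python sketch that illustrates what the four built-in nodes do. The functions `projection`, `union`, `join` and `aggregation` are hypothetical stand-ins that mimic the node semantics on lists of rows. They are not a HANA API, and real Calculation Views execute inside the database engine.

```python
from collections import defaultdict

# Each function mimics one default node of a graphical Calculation View,
# operating on lists of dicts (one dict per row). Illustrative only.


def projection(rows, columns, where=None):
    """Keep the selected columns, optionally filtering rows first."""
    return [{c: r[c] for c in columns} for r in rows if where is None or where(r)]


def union(*inputs):
    """Stack rows from inputs with the same columns (UNION ALL semantics)."""
    return [row for rows in inputs for row in rows]


def join(left, right, key):
    """Inner join of two inputs on a single key column."""
    by_key = defaultdict(list)
    for rrow in right:
        by_key[rrow[key]].append(rrow)
    return [{**lrow, **rrow} for lrow in left for rrow in by_key.get(lrow[key], [])]


def aggregation(rows, group_by, measure):
    """Sum one measure per combination of the group_by attributes."""
    totals = defaultdict(int)
    for r in rows:
        totals[tuple(r[c] for c in group_by)] += r[measure]
    return [{**dict(zip(group_by, k)), measure: v} for k, v in totals.items()]


# Hypothetical inputs: two yearly sales tables and a product dimension.
sales_2023 = [{"PRODUCT_ID": "P1", "AMOUNT": 100}, {"PRODUCT_ID": "P2", "AMOUNT": 50}]
sales_2024 = [{"PRODUCT_ID": "P1", "AMOUNT": 70}]
products = [
    {"PRODUCT_ID": "P1", "CATEGORY": "Laptops", "COST": 60},
    {"PRODUCT_ID": "P2", "CATEGORY": "Phones", "COST": 30},
]

# Union -> Join (with a Projection of the dimension) -> Aggregation
result = aggregation(
    join(union(sales_2023, sales_2024),
         projection(products, ["PRODUCT_ID", "CATEGORY"]),
         "PRODUCT_ID"),
    ["CATEGORY"],
    "AMOUNT",
)
print(result)
```

The nodes compose the same way they are wired in the graphical editor: the output of one node becomes the input of the next.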

How to create a Calculation View?

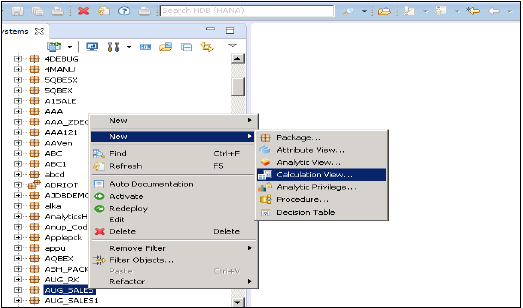

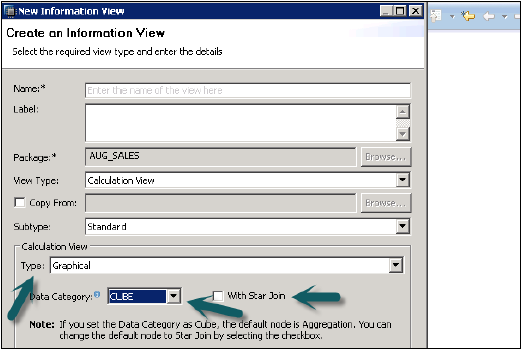

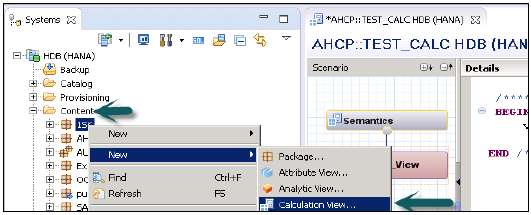

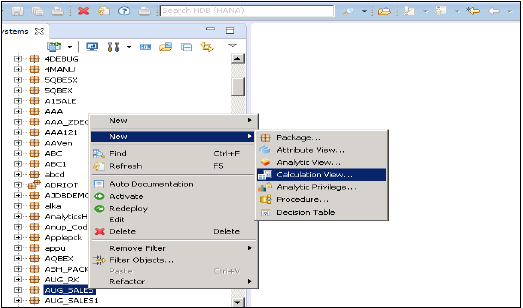

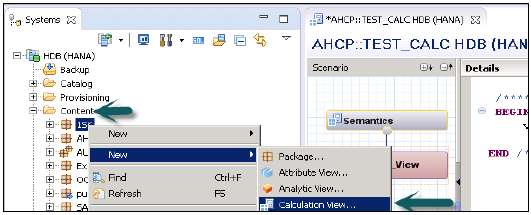

Choose the Package under which you want to create a Calculation View. Right-click the Package → New → Calculation View. When you click Calculation View, a new window opens.

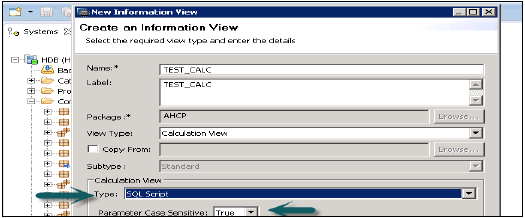

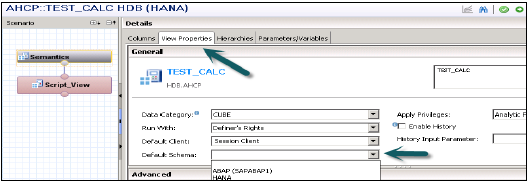

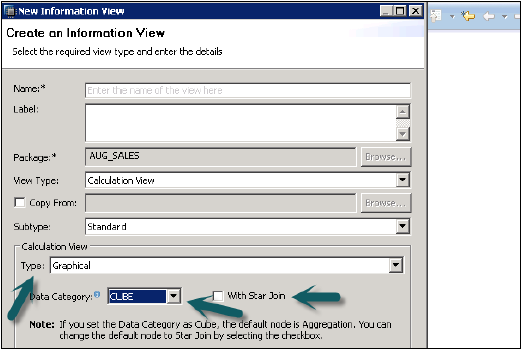

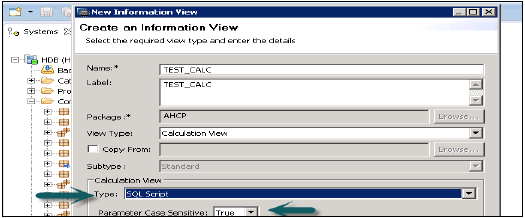

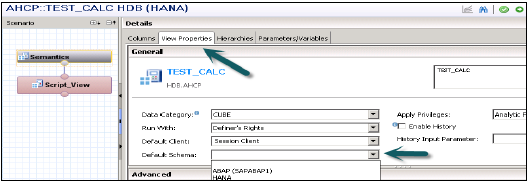

Enter the View name and description, choose Calculation View as the view type, and choose the subtype Standard or Time (Time is a special kind of View that adds a time dimension). There are two types of Calculation View − Graphical and SQL Script.

Graphical Calculation Views

A Graphical Calculation View has default nodes such as Aggregation, Projection, Join and Union. It is used to consume Attribute, Analytic and other Calculation Views.

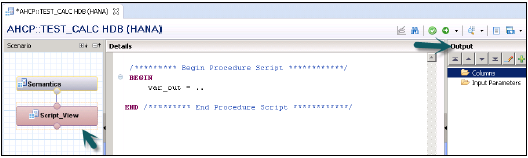

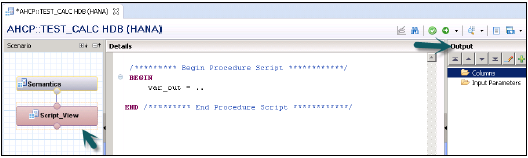

SQL Script based Calculation Views

A SQL Script-based Calculation View is written in SQLScript, using SQL commands or HANA-defined functions.

Data Category

Cube − the default node is Aggregation. With this data category, you can also choose Star Join.

Dimension − the default node is Projection.

Calculation View with Star Join

A Calculation View with Star Join does not allow base column tables, Attribute Views or Analytic Views to be added at the data foundation. All Dimension tables must be converted to Dimension Calculation Views to be used in the Star Join. All Fact tables can be added and can use the default nodes of the Calculation View.

Example

The following example shows how we can use Calculation View with Star join −

You have four tables: two Dimension tables and two Fact tables. You need to list all employees with their joining date, Emp Name, empId, Salary and Bonus.

Copy the script below into the SQL editor and execute it.

Dim Tables − Empdim and Empdate

```sql
Create column table Empdim (empId nvarchar(3), Empname nvarchar(100));
Insert into Empdim values('AA1','John');
Insert into Empdim values('BB1','Anand');
Insert into Empdim values('CC1','Jason');

Create column table Empdate (caldate date, CALMONTH nvarchar(4), CALYEAR nvarchar(4));
Insert into Empdate values('20100101','04','2010');
Insert into Empdate values('20110101','05','2011');
Insert into Empdate values('20120101','06','2012');
```

Fact Tables − Empfact1, Empfact2

```sql
Create column table Empfact1 (empId nvarchar(3), Empdate date, Sal integer);
Insert into Empfact1 values('AA1','20100101',5000);
Insert into Empfact1 values('BB1','20110101',10000);
Insert into Empfact1 values('CC1','20120101',12000);

Create column table Empfact2 (empId nvarchar(3), deptName nvarchar(20), Bonus integer);
Insert into Empfact2 values ('AA1','SAP', 2000);
Insert into Empfact2 values ('BB1','Oracle', 2500);
Insert into Empfact2 values ('CC1','JAVA', 1500);
```
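You can check the result the Star Join view should return with a plain SQL join. The sketch below replays the script above in Python's `sqlite3` module as a local stand-in. Two adaptations for SQLite apply: the HANA-specific `COLUMN` keyword is dropped, and the date columns are declared as text. The query joins the two Fact tables on `empId` (the Fact Join) and then attaches both Dimension tables, mirroring the shape of the Star Join.

```python
import sqlite3

# The tutorial's script adapted for SQLite (a local stand-in for HANA).
# "COLUMN" is HANA-specific and dropped; dates are kept as 'YYYYMMDD' text.
EMP_SETUP = """
CREATE TABLE Empdim (empId NVARCHAR(3), Empname NVARCHAR(100));
INSERT INTO Empdim VALUES ('AA1', 'John'), ('BB1', 'Anand'), ('CC1', 'Jason');

CREATE TABLE Empdate (caldate TEXT, CALMONTH NVARCHAR(4), CALYEAR NVARCHAR(4));
INSERT INTO Empdate VALUES ('20100101', '04', '2010'), ('20110101', '05', '2011'),
                           ('20120101', '06', '2012');

CREATE TABLE Empfact1 (empId NVARCHAR(3), Empdate TEXT, Sal INTEGER);
INSERT INTO Empfact1 VALUES ('AA1', '20100101', 5000), ('BB1', '20110101', 10000),
                            ('CC1', '20120101', 12000);

CREATE TABLE Empfact2 (empId NVARCHAR(3), deptName NVARCHAR(20), Bonus INTEGER);
INSERT INTO Empfact2 VALUES ('AA1', 'SAP', 2000), ('BB1', 'Oracle', 2500),
                            ('CC1', 'JAVA', 1500);
"""

# Fact Join first (Empfact1 + Empfact2 on empId), then both dimensions attached.
EMP_STAR_JOIN = """
SELECT d.empId, d.Empname, f1.Empdate AS joining_date, t.CALYEAR, f1.Sal, f2.Bonus
FROM Empfact1 AS f1
JOIN Empfact2 AS f2 ON f2.empId   = f1.empId
JOIN Empdim   AS d  ON d.empId    = f1.empId
JOIN Empdate  AS t  ON t.caldate  = f1.Empdate
ORDER BY d.empId
"""


def run_emp_star_join():
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(EMP_SETUP)
        return conn.execute(EMP_STAR_JOIN).fetchall()
    finally:
        conn.close()


for row in run_emp_star_join():
    print(row)
```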

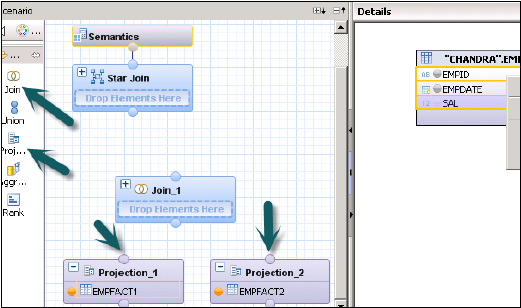

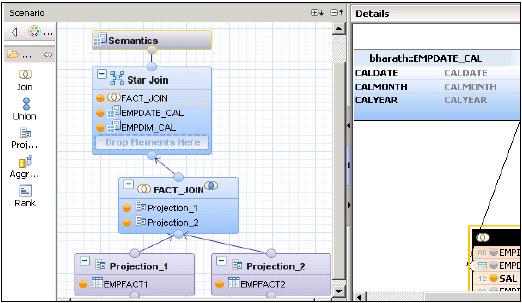

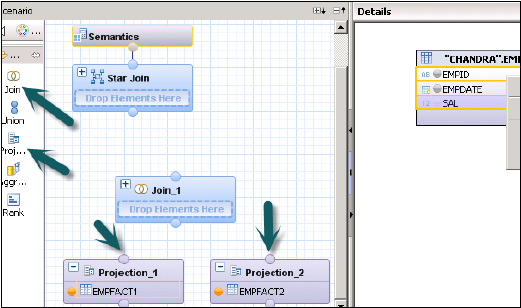

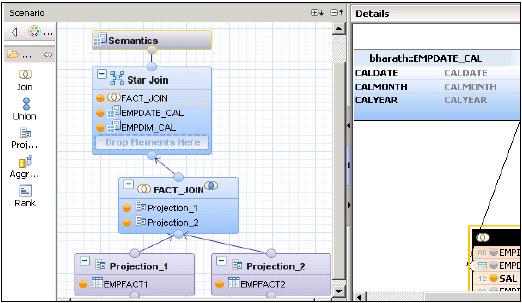

Now implement the Calculation View with Star Join. First, convert both Dimension tables to Dimension Calculation Views.

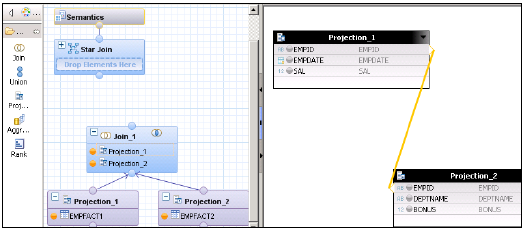

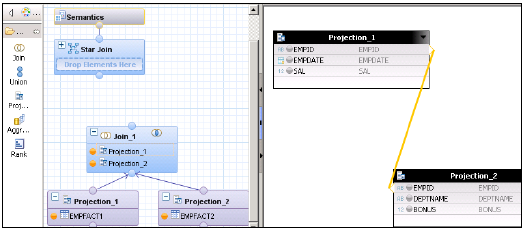

Create a Calculation View with Star Join. In the Graphical pane, add two Projections, one for each Fact table. Add each Fact table to its Projection and add the attributes of these Projections to the Output pane.

Add a Join from the default nodes and join both Fact tables. Add the columns of this Fact Join to the Output pane.

In the Star Join, add both Dimension Calculation Views and the Fact Join, as shown below. Choose the columns in the Output pane and activate the View.

SAP HANA Calculation View − Star Join

Once the view is activated successfully, right-click the view name and click Data Preview. Add attributes to the Labels axis and measures to the Values axis to do the analysis.

Benefits of using Star Join

It simplifies the design process: you do not need to create Analytic Views and Attribute Views, because Fact tables can be used directly as Projections.

3NF (third normal form) models are possible with Star Join.

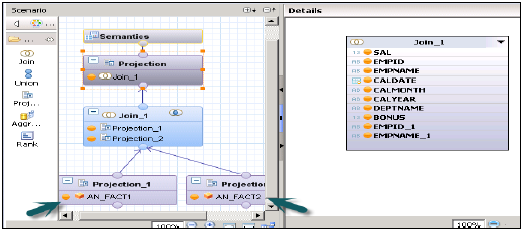

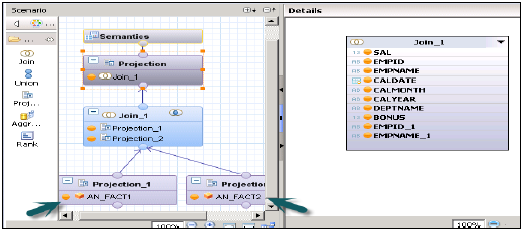

Calculation View without Star Join

Create two Attribute Views on the two Dimension tables, add their output and activate both views.

Create two Analytic Views on the Fact tables → in each Analytic View, add both Attribute Views and the respective Fact table (Fact1 or Fact2) at the Data Foundation.

Now create a Calculation View with data category Dimension (Projection). Create Projections of both Analytic Views and join them. Add the attributes of this Join to the Output pane. Now connect the Join to the Projection and add the output again.

Activate the view and go to Data Preview for analysis.

SAP HANA - Analytic Privileges

Analytic Privileges are used to limit access to HANA Information Views. With Analytic Privileges, you can assign different types of rights to different users on different components of a View.

Sometimes, data in a view should not be accessible to users who have no relevant need for that data.

Example

Suppose you have an Analytic View EmpDetails that holds details about the employees of a company: Emp name, Emp Id, Dept, Salary, Date of Joining, Emp logon, etc. If you do not want your report developer to see the Salary or Emp logon details of all employees, you can hide them by using the Analytic Privileges option.

Analytic Privileges are applied only to attributes in an Information View; measures cannot be used to restrict access in Analytic Privileges.

Analytic Privileges are used to control read access on SAP HANA Information views.

So you can restrict data by Empname, EmpId, Emp logon or Emp Dept, but not by numerical values such as salary or bonus.
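The effect of an attribute restriction can be modeled as a row filter. The following Python sketch is a hypothetical model, not the HANA implementation. `AnalyticPrivilege` keeps a set of allowed values per attribute and returns only matching rows. It also rejects an attempt to restrict a measure such as `Salary`. The sample rows borrow names and departments from the tutorial's employee tables and are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class AnalyticPrivilege:
    """Hypothetical model: attribute restrictions act as row filters on a view."""
    name: str
    attributes: frozenset                            # attribute columns (no measures)
    restrictions: dict = field(default_factory=dict)

    def restrict(self, attribute, allowed_values):
        # Only attributes can carry restrictions; measures are rejected.
        if attribute not in self.attributes:
            raise ValueError(f"{attribute!r} is not an attribute; measures cannot be restricted")
        self.restrictions[attribute] = set(allowed_values)

    def apply(self, rows):
        """Return only rows whose restricted attributes hold an allowed value."""
        return [
            row for row in rows
            if all(row[attr] in allowed for attr, allowed in self.restrictions.items())
        ]


emp_details = [
    {"EmpId": "AA1", "Empname": "John", "Dept": "SAP", "Salary": 5000},
    {"EmpId": "BB1", "Empname": "Anand", "Dept": "Oracle", "Salary": 10000},
    {"EmpId": "CC1", "Empname": "Jason", "Dept": "JAVA", "Salary": 12000},
]

ap = AnalyticPrivilege("AP_EMP_DEPT", frozenset({"EmpId", "Empname", "Dept"}))
ap.restrict("Dept", {"SAP", "JAVA"})
visible = ap.apply(emp_details)
print([row["Empname"] for row in visible])
```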

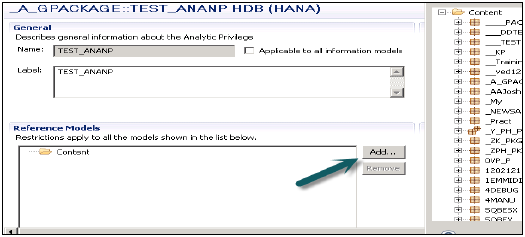

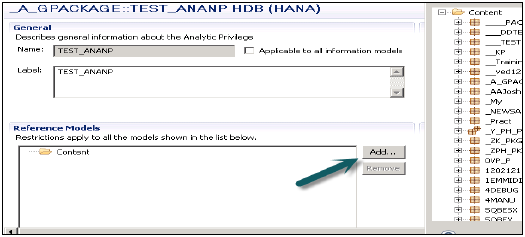

Creating Analytic Privileges

Right-click the Package name and choose New → Analytic Privilege, or open it from the HANA Modeler Quick Launch.

Enter the name and description of the Analytic Privilege → Finish. A new window opens.

You can click Next and add a Modeling View in this window before you click Finish. There is also an option to copy an existing Analytic Privilege package.

When you click the Add button, all the views under the Content tab are shown.

Choose the View that you want to add to the Analytic Privilege package and click OK. The selected View is added under Reference Models.

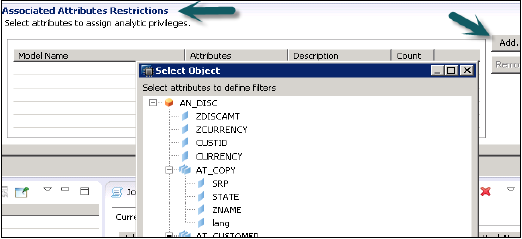

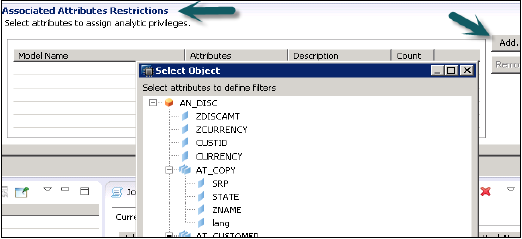

Now, to add attributes from the selected view to the Analytic Privilege, click the Add button in the Associated Attributes Restrictions window.

Choose the objects you want to add to the Analytic Privilege from the Select Object option and click OK.

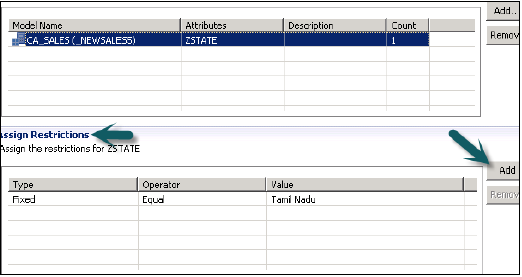

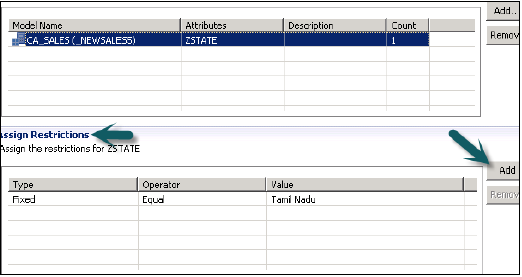

The Assign Restrictions option lets you specify values for the attributes of the Modeling View. A user who is assigned this privilege sees only the rows that match these values in the Data Preview of the Modeling View; all other rows are hidden from that user.

Now activate the Analytic Privilege by clicking the green round icon at the top. The status message “Completed successfully” in the job log confirms the activation, and the privilege can now be used by adding it to a role.

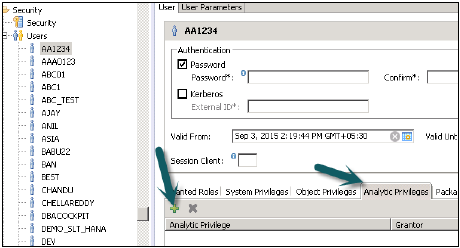

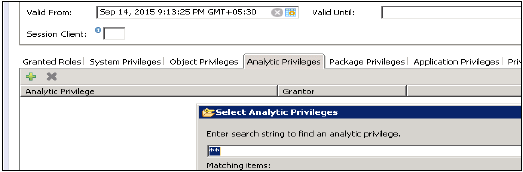

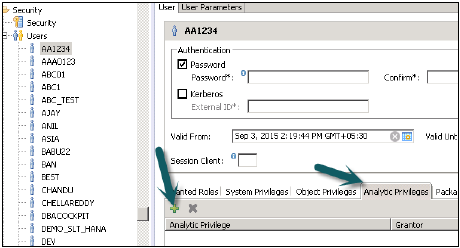

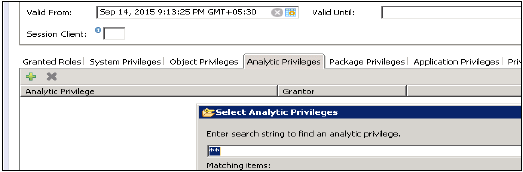

Now, to assign this Analytic Privilege to a user, go to the Security tab → Users → select the user to whom you want to apply it.

Search for the Analytic Privilege by name and click OK. It is added to the user under the Analytic Privileges tab.

To remove an Analytic Privilege from a specific user, select it under that tab and use the red delete option. Use Deploy (the arrow icon at the top, or F8) to apply the change to the user profile.

SAP HANA - Information Composer

SAP HANA Information Composer is a self-service modeling environment for end users to analyze data sets. It allows you to import data in workbook formats (.xls, .csv) into the HANA database and to create Modeling Views for analysis.

Information Composer is very different from HANA Modeler, and the two are designed for separate sets of users. Technical users with strong experience in data modeling use HANA Modeler. Business users without technical knowledge use Information Composer, which provides simple functionality with an easy-to-use interface.

Features of Information Composer

Data extraction − Information Composer helps you extract, clean and preview data, and it automates the creation of physical tables in the HANA database.

Manipulating data − It lets you combine two objects (physical tables, Analytic Views, Attribute Views and Calculation Views) and create an Information View. This view can be consumed by SAP BusinessObjects tools such as SAP BusinessObjects Analysis and SAP BusinessObjects Explorer, and by other tools such as MS Excel.

It is provided as a centralized IT service in the form of a URL, which can be accessed from anywhere.

How to upload data using Information Composer?

It allows you to upload large amounts of data (up to 5 million cells). The link to access Information Composer is −

http://<server>:<port>/IC

Log in to SAP HANA Information Composer. You can load or manipulate data using this tool.

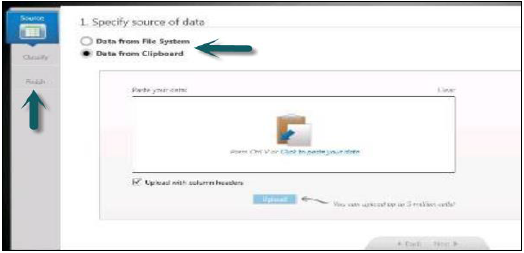

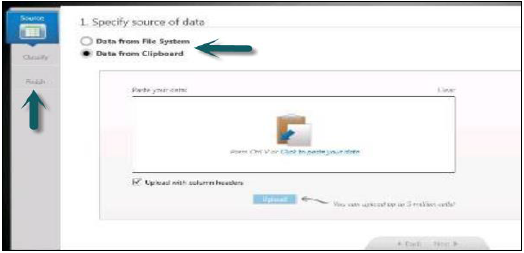

Data can be uploaded in two ways −

- Uploading .xls or .csv files directly to the HANA database

- Copying data to the clipboard and pasting it from there into the HANA database

Data can also be loaded along with its header row.

On the left side of Information Composer, you have three options −

Select Source of data → Classify data → Publish

Once data is published to the HANA database, you cannot rename the table. In this case, you have to delete the table from the schema in the HANA database.

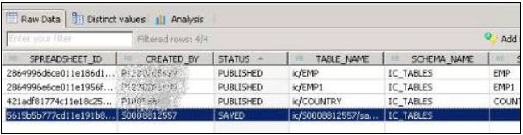

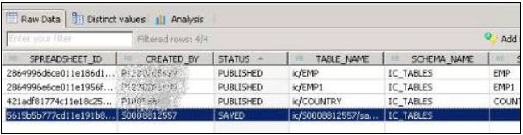

The “SAP_IC” schema contains tables such as IC_MODELS and IC_SPREADSHEETS, where you can find details of the tables created using IC.

Using Clipboard

Another way to upload data in IC is through the clipboard: copy the data to the clipboard and upload it with Information Composer. Information Composer also lets you preview the data and see a summary of the data in temporary storage. It has a built-in data cleansing capability that removes inconsistencies in the data.

Once the data is cleansed, you need to classify it, for example whether each column is an attribute or a measure. IC has a built-in feature to check the data type of the uploaded data.

The final step is to publish the data to physical tables in the HANA database. Provide a technical name and description for the table; it will be loaded into the IC_Tables schema.
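A short Python sketch can illustrate the cleansing and classification steps. The rules here are assumptions chosen for illustration: trim cells, drop blank and duplicate rows, treat all-numeric columns as measures and treat everything else as attributes. This tutorial does not document Information Composer's actual rules, and the sample data is hypothetical.

```python
import csv
import io


def _is_int(value):
    try:
        int(value)
        return True
    except ValueError:
        return False


def _is_decimal(value):
    try:
        float(value)
        return True
    except ValueError:
        return False


def cleanse(rows):
    """Trim cells and drop empty rows and exact duplicates (hypothetical rules)."""
    seen, cleaned = set(), []
    for row in rows:
        cells = tuple(cell.strip() for cell in row)
        if not any(cells) or cells in seen:
            continue
        seen.add(cells)
        cleaned.append(cells)
    return cleaned


def classify(csv_text):
    """Return ({column: (sql_type, role)}, cleansed_rows) for CSV text with a header."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [h.strip() for h in next(reader)]
    rows = cleanse(reader)
    result = {}
    for i, column in enumerate(header):
        values = [row[i] for row in rows if i < len(row) and row[i]]
        if values and all(_is_int(v) for v in values):
            result[column] = ("INTEGER", "measure")
        elif values and all(_is_decimal(v) for v in values):
            result[column] = ("DECIMAL", "measure")
        else:
            result[column] = ("NVARCHAR", "attribute")
    return result, rows


SAMPLE = "Region, Product ,Revenue\nEMEA,Laptop, 2500\nEMEA,Laptop, 2500\n\nAPJ,Phone,500.5\n"
types, rows = classify(SAMPLE)
print(types)
print(rows)
```

This naive heuristic would classify a column of numeric IDs as a measure, which is one reason a person still confirms the classification.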

User Roles for using data published with Information Composer

Two sets of users can be defined to use data published from IC.

IC_MODELER is for creating physical tables, uploading data and creating information views.

IC_PUBLIC allows users to view information views created by other users. This role does not allow the user to upload or create any information views using IC.

System Requirement for Information Composer

Server Requirements −

At least 2GB of available RAM is required.

Java 6 (64-bit) must be installed on the server.

The Information Composer Server must be physically located next to the HANA server.

Client Requirements −

- Internet Explorer with Silverlight 4 installed.

SAP HANA - Export & Import

The HANA Export and Import options allow tables, Information Models and landscapes to be moved to a different or existing system. You do not need to recreate all tables and Information Models: you can simply export them to a new system or import them into an existing target system, which reduces the effort.

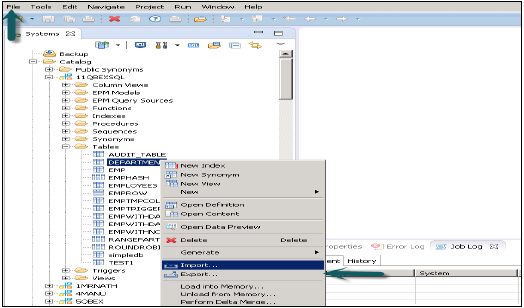

These options can be accessed from the File menu at the top, or by right-clicking any table or Information Model in HANA Studio.

Exporting a table/Information model in HANA Studio

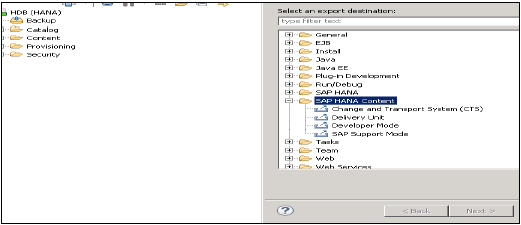

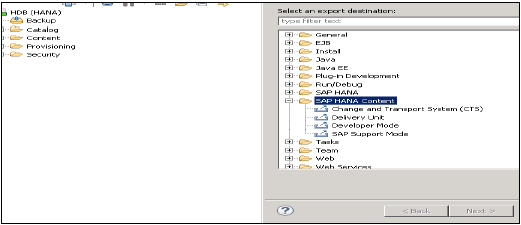

Go to the File menu → Export. You will see the options shown below −

Export Options under SAP HANA Content

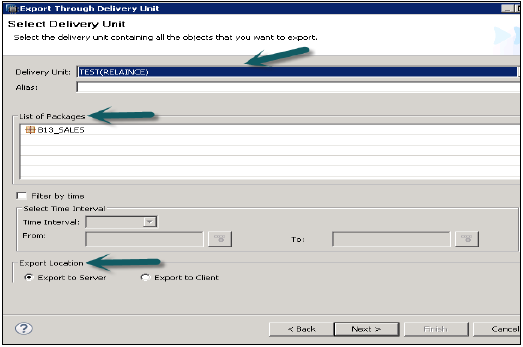

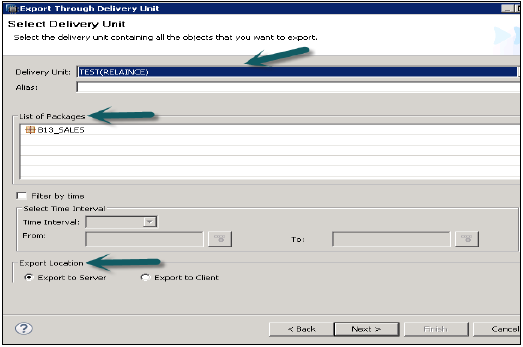

Delivery Unit

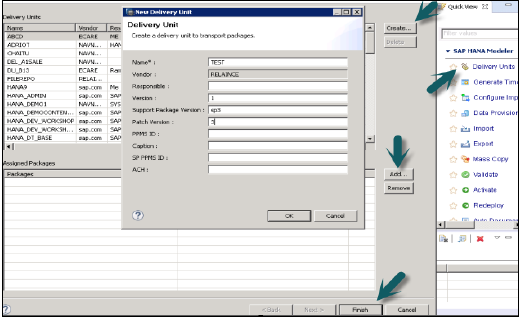

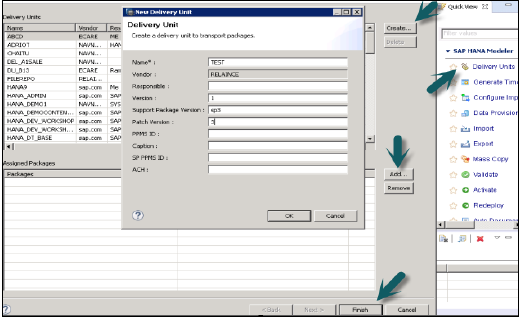

A Delivery Unit is a single unit that can be mapped to multiple packages and exported as a single entity, so that all the packages assigned to the Delivery Unit are treated as one unit.

Users can use this option to export all the packages that make up a Delivery Unit, along with the relevant objects they contain, to a HANA Server or to a local Client location.

The user must create the Delivery Unit before using it.

This can be done through HANA Modeler → Delivery Unit → Select System and Next → Create → Fill the details like Name, Version, etc. → OK → Add Packages to Delivery unit → Finish

Once the Delivery Unit is created and the packages are assigned to it, the user can see the list of packages by using the Export option −

Go to File → Export → Delivery Unit → Select the Delivery Unit.

You can see the list of all packages assigned to the Delivery Unit. You can then choose the export location −

- Export to Server

- Export to Client

You can export the Delivery Unit either to HANA Server location or to a Client location as shown.

The user can restrict the export through “Filter by time”, which means that only Information Views updated within the specified time interval are exported.

Select the Delivery Unit and Export Location and then Click Next → Finish. This will export the selected Delivery Unit to the specified location.
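The following sketch shows how a Delivery Unit, its packages and the “Filter by time” option relate. `DeliveryUnit`, `InfoView` and the sample package and view names are hypothetical. The sketch only models which views an export would include, not the export file format.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class InfoView:
    package: str
    name: str
    last_changed: datetime


@dataclass
class DeliveryUnit:
    name: str
    version: str
    packages: tuple          # packages assigned to this Delivery Unit

    def export(self, views, changed_from: Optional[datetime] = None,
               changed_to: Optional[datetime] = None):
        """Names of views in this unit's packages, optionally limited to a change window."""
        selected = []
        for view in views:
            if view.package not in self.packages:
                continue                      # not part of this Delivery Unit
            if changed_from is not None and view.last_changed < changed_from:
                continue                      # changed before the filter window
            if changed_to is not None and view.last_changed > changed_to:
                continue                      # changed after the filter window
            selected.append(view.name)
        return selected


views = [
    InfoView("sales", "AV_SALES", datetime(2024, 3, 1)),
    InfoView("sales", "CV_SALES_TREND", datetime(2023, 11, 20)),
    InfoView("hr", "AT_EMPLOYEE", datetime(2024, 5, 15)),
    InfoView("finance", "CV_LEDGER", datetime(2024, 4, 1)),
]
du = DeliveryUnit("DU_REPORTING", "1.0", ("sales", "hr"))
print(du.export(views))
print(du.export(views, datetime(2024, 1, 1), datetime(2024, 6, 30)))
```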

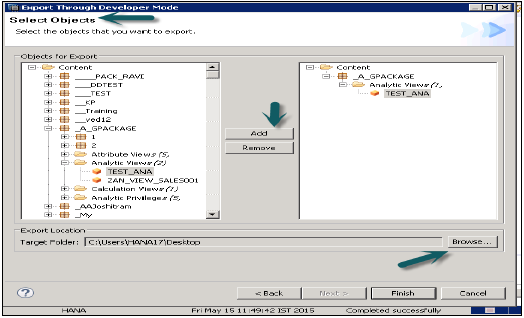

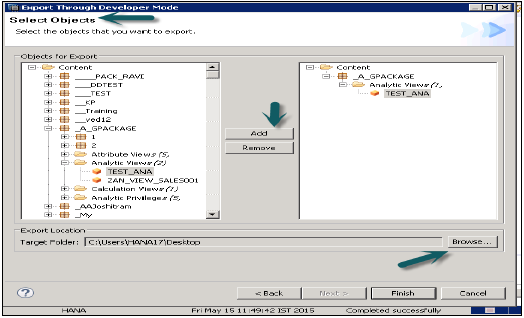

Developer Mode

This option can be used to export individual objects to a location in the local system. The user can select a single Information View or a group of Views and Packages, select the local Client location for export, and click Finish.

This is shown in the snapshot below.

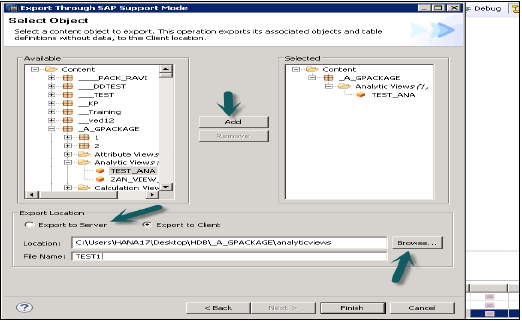

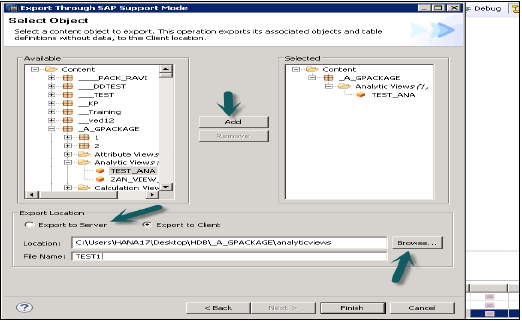

Support Mode

This option can be used to export objects along with their data for SAP support purposes, when requested.

Example − A user creates an Information View that throws an error they cannot resolve. In that case, they can use this option to export the view along with its data and share it with SAP for debugging.

Export Options under SAP HANA Studio −

Landscape − To export the landscape from one system to other.

Tables − This option can be used to export tables along with their content.

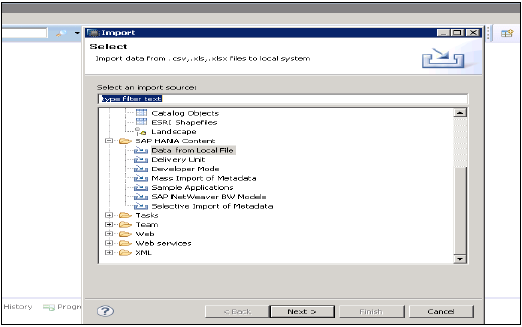

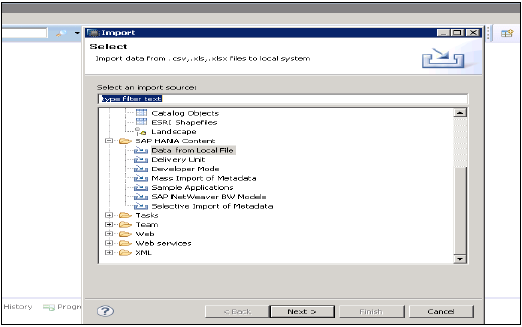

Import Options under SAP HANA Content

Go to File → Import. You will see all the options shown below under Import.

Data from Local File

This option is used to import data from a flat file such as an .xls or .csv file.

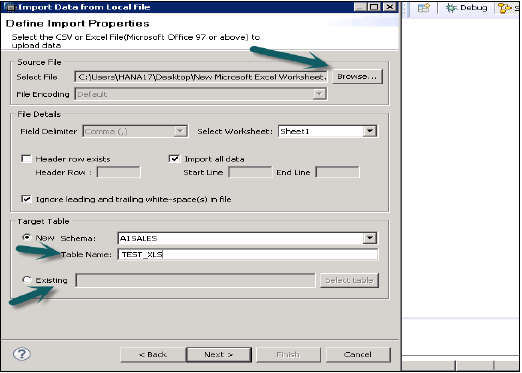

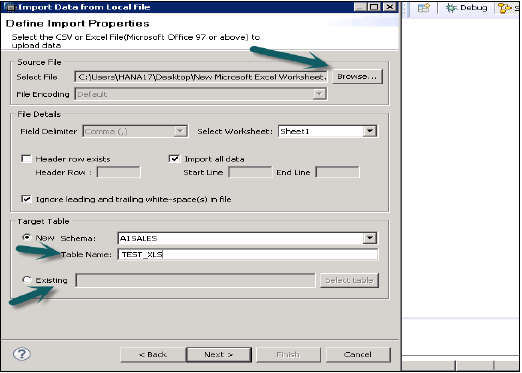

Click Next → Choose Target System → Define Import Properties

Select the source file by browsing the local system. There is an option to keep the header row, and an option to either create a new table under an existing schema or import the data from the file into an existing table.

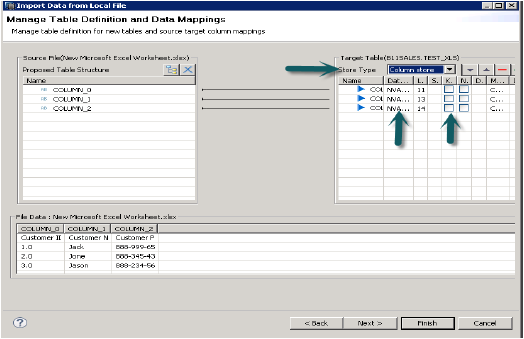

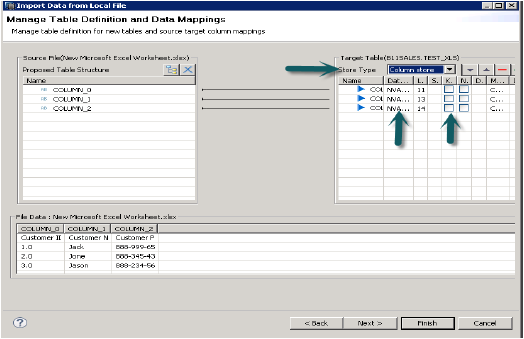

When you click Next, you can define the Primary Key, change the data types of columns, define the storage type of the table and change the proposed table structure.
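The proposed table structure corresponds to a `CREATE TABLE` statement. The helper below is a hypothetical sketch that builds such a statement from column names, types, a primary key and a storage type. HANA does accept `CREATE COLUMN TABLE` and `CREATE ROW TABLE`, but the statement the import wizard actually generates may differ. The schema, table and column names are illustrative.

```python
def create_table_ddl(schema, table, columns, primary_key=(), storage="COLUMN"):
    """Build a CREATE TABLE statement from a proposed structure (illustrative only).

    columns: list of (name, sql_type) pairs; primary_key: column names;
    storage: "COLUMN" or "ROW", the two HANA table storage types.
    """
    if storage not in ("COLUMN", "ROW"):
        raise ValueError("storage must be 'COLUMN' or 'ROW'")
    names = [name for name, _ in columns]
    missing = [k for k in primary_key if k not in names]
    if missing:
        raise ValueError(f"primary key columns not in table: {missing}")
    parts = [f'"{name}" {sql_type}' for name, sql_type in columns]
    if primary_key:
        parts.append("PRIMARY KEY (" + ", ".join(f'"{k}"' for k in primary_key) + ")")
    return f'CREATE {storage} TABLE "{schema}"."{table}" (' + ", ".join(parts) + ");"


print(create_table_ddl(
    "SALES_DATA", "REGION_REVENUE",
    [("REGION", "NVARCHAR(20)"), ("REVENUE", "DECIMAL(15,2)")],
    primary_key=["REGION"],
))
```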

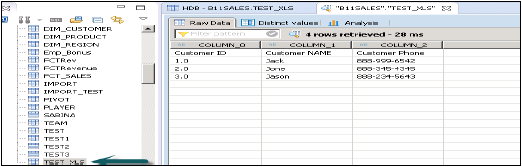

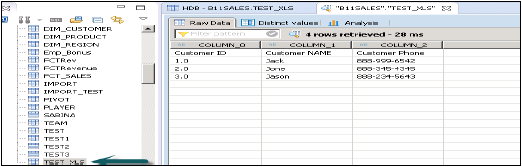

When you click Finish, the table appears in the list of tables in the specified schema. You can preview the data and check the table definition; it will be the same as that of the .xls file.

Delivery Unit

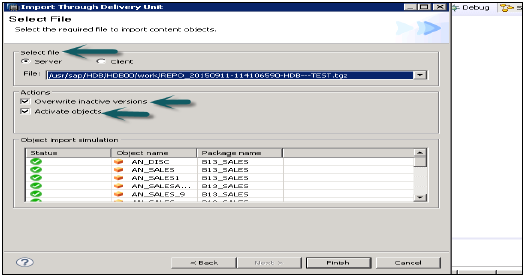

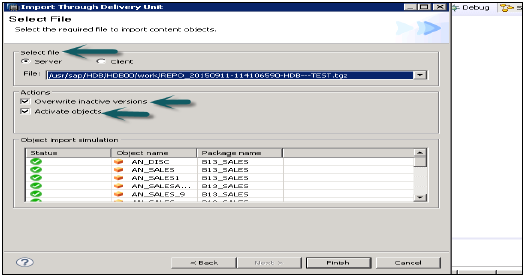

Select the Delivery Unit by going to File → Import → Delivery Unit. You can import from a server or from a local client.

You can select “Overwrite inactive versions”, which overwrites any existing inactive versions of objects. If the user selects “Activate objects”, all imported objects are activated after the import, so the user does not need to activate the imported views manually.

Click Finish; once the import completes successfully, the content is available in the target system.

Developer Mode

Browse for the local Client location where the views were exported and select the views to be imported. The user can select individual Views or a group of Views and Packages, then click Finish.

Mass Import of Metadata

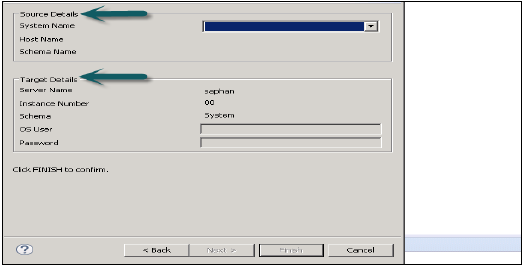

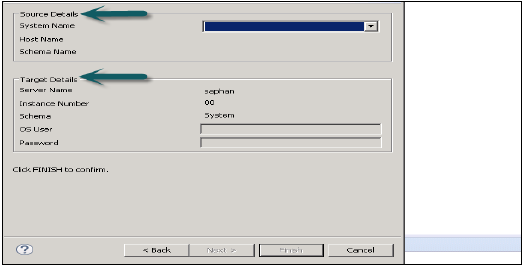

Go to File → Import → Mass Import of Metadata → Next and select the source and target system.

Configure the System for Mass Import and click Finish.

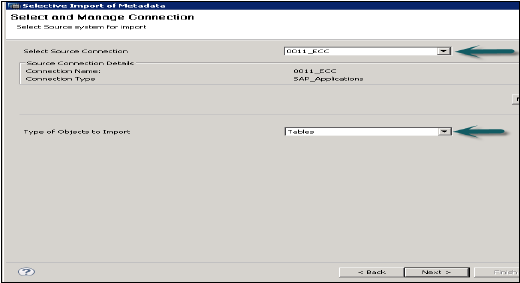

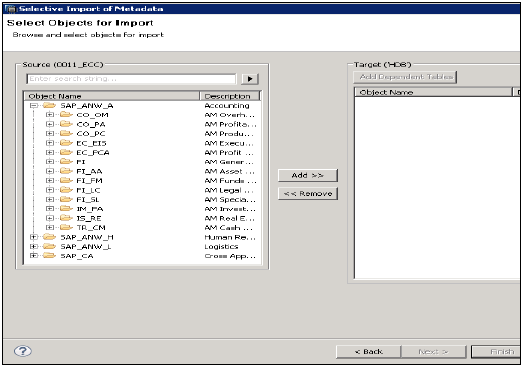

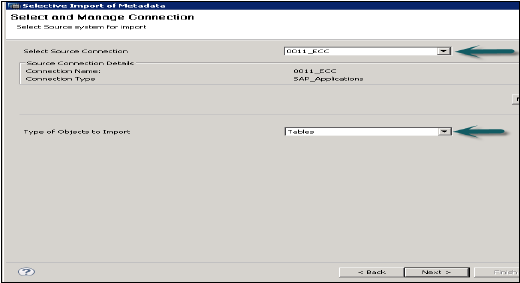

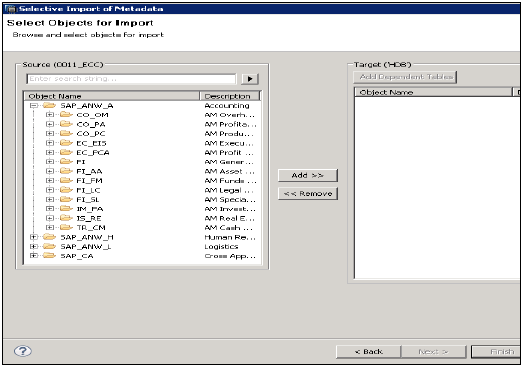

Selective Import of Metadata

This option lets you choose tables and a target schema to import metadata from SAP Applications.

Go to File → Import → Selective Import of Metadata → Next

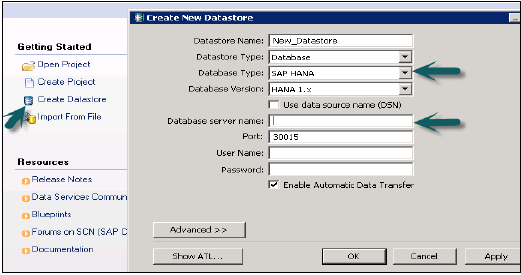

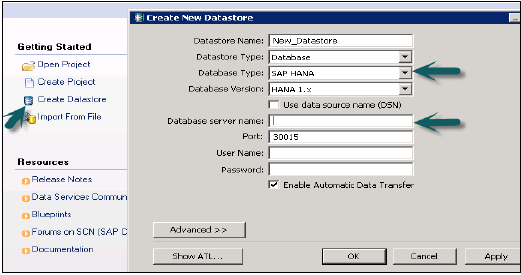

Choose a source connection of type “SAP Applications”. Note that a Data Store of type SAP Applications must already have been created → Click Next.

Select the tables you want to import and validate the data if required. Then click Finish.

SAP HANA - Reporting View

With the Information Modeling feature in SAP HANA, we can create different Information Views: Attribute Views, Analytic Views and Calculation Views. These Views can be consumed by different reporting tools such as SAP BusinessObjects, SAP Lumira, Design Studio, Office Analysis and even third-party tools like MS Excel.

These reporting tools enable business managers, analysts, sales managers and senior management to analyze historical information, create business scenarios and decide the company's business strategy.

This creates the need to consume HANA Modeling Views in different reporting tools and to generate reports and dashboards that are easy for end users to understand.

In most companies where SAP is implemented, reporting on HANA is done with BI platform tools that consume both SQL and MDX queries through Relational and OLAP connections. There is a wide variety of BI tools, such as Web Intelligence, Crystal Reports, Dashboards, Explorer, Office Analysis and many more.

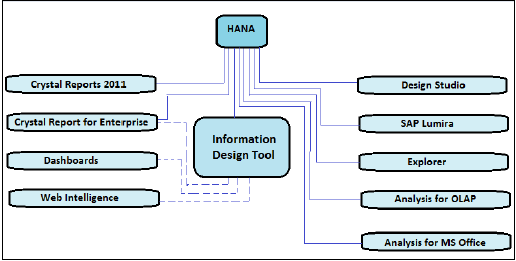

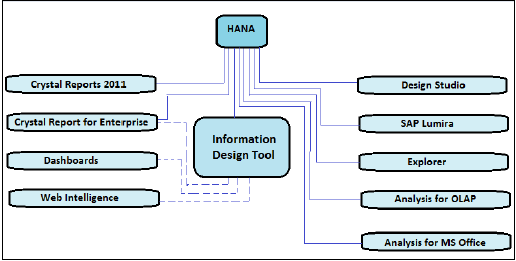

BI 4.0 Connectivity to HANA Views

Reporting Tools

Web Intelligence and Crystal Reports are the most common BI tools used for reporting. WebI uses a semantic layer called a Universe to connect to the data source, and reports in the tool are built on these Universes. Universes are designed with the Universe Design Tool (UDT) or the Information Design Tool (IDT). IDT supports multisource data sources, whereas UDT supports only a single source.

The main tools used for designing interactive dashboards are Design Studio and Dashboard Designer. Design Studio is the future tool for designing dashboards; it consumes HANA views via a BI Consumer Services (BICS) connection. Dashboard Designer (Xcelsius) uses IDT to consume schemas in the HANA database with a Relational or OLAP connection.

SAP Lumira has a built-in feature to connect directly to, or load data from, the HANA database. HANA views can be consumed directly in Lumira for visualization and creating stories.

Office Analysis uses an OLAP connection to connect to HANA Information views. This OLAP connection can be created in CMC or IDT.

The picture above uses solid lines for all BI tools that can be connected and integrated directly with SAP HANA using an OLAP connection. Tools that need a relational connection through IDT to connect to HANA are shown with dotted lines.

Relational vs OLAP Connection

The basic idea is this: if you need to access data from a table or a conventional database, your connection should be a relational connection. If your source is an application and the data is stored in a cube (multidimensional, such as InfoCubes or Information Models), you would use an OLAP connection.

- A Relational connection can only be created in IDT/UDT.

- An OLAP connection can be created in both IDT and CMC.

Note also that a relational connection always produces a SQL statement that is fired from the report, while an OLAP connection normally produces an MDX statement.
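The rule above can be summarized in a few lines of Python. This is a hypothetical helper and is not part of any SAP tool. It picks the connection type from the nature of the source and shows the shape of the query each type produces. `FCT_SALES`, `CV_SALES` and `SALES` are placeholder names. The MDX string is generic MDX, not the exact statement a BI tool would generate.

```python
from enum import Enum


class ConnectionType(Enum):
    RELATIONAL = "SQL"   # value = query language the report fires
    OLAP = "MDX"


# Where each connection type can be created, per the text above
CREATED_IN = {
    ConnectionType.RELATIONAL: {"IDT", "UDT"},
    ConnectionType.OLAP: {"IDT", "CMC"},
}


def choose_connection(source_is_multidimensional: bool) -> ConnectionType:
    """Cubes and Information Models -> OLAP; tables or conventional databases -> relational."""
    return ConnectionType.OLAP if source_is_multidimensional else ConnectionType.RELATIONAL


def sample_query(conn: ConnectionType, measure: str, source: str) -> str:
    """Illustrative query shapes only; real reports generate richer statements."""
    if conn is ConnectionType.RELATIONAL:
        return f'SELECT SUM("{measure}") FROM "{source}"'
    return f"SELECT {{[Measures].[{measure}]}} ON COLUMNS FROM [{source}]"


conn = choose_connection(source_is_multidimensional=True)
print(conn.name, conn.value, sorted(CREATED_IN[conn]))
print(sample_query(conn, "SALES", "CV_SALES"))
```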

Information Design Tool

In the Information Design Tool (IDT), you can create a relational connection to an SAP HANA view or table using JDBC or ODBC drivers. You can then build a Universe on this connection to give client tools such as Dashboards and Web Intelligence access to the data, as shown in the picture above.

You can create a direct connection to SAP HANA using JDBC or ODBC drivers.
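A direct JDBC connection is identified by a URL built from the server name and the SQL port. The sketch below assumes the common `jdbc:sap://<host>:<port>/` URL form. It also assumes a classic single-container system, where the SQL port is `3<instance number>15` (for example, 30015 for instance 00). Multitenant systems use other ports, so treat the port rule as an assumption. The server name is a placeholder.

```python
def hana_sql_port(instance_number: str) -> int:
    """SQL port 3<NN>15, assuming a classic single-container HANA system."""
    if len(instance_number) != 2 or not (instance_number.isascii() and instance_number.isdigit()):
        raise ValueError("instance number must be two digits, e.g. '00'")
    return int(f"3{instance_number}15")


def hana_jdbc_url(server: str, instance_number: str) -> str:
    """JDBC URL in the jdbc:sap://<host>:<port>/ form."""
    return f"jdbc:sap://{server}:{hana_sql_port(instance_number)}/"


print(hana_jdbc_url("hanaserver", "00"))
```

SAP's Python driver `hdbcli` takes the same host and port as `dbapi.connect(address=..., port=..., user=..., password=...)`.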

SAP HANA - Crystal Reports

Crystal Reports for Enterprise

In Crystal Reports for Enterprise, you can access SAP HANA data by using an existing relational connection created using the information design tool.

You can also connect to SAP HANA using an OLAP connection created using information design tool or CMC.

Design Studio

Design Studio can access SAP HANA data by using an existing OLAP connection created in the Information Design Tool or CMC, in the same way as Office Analysis.

Dashboards

Dashboards can connect to SAP HANA only through a relational Universe. Customers using Dashboards on top of SAP HANA should strongly consider building their new dashboards with Design Studio.

Web Intelligence

Web Intelligence can connect to SAP HANA only through a Relational Universe.

SAP Lumira

Lumira can connect directly to SAP HANA Analytic and Calculation views. It can also connect to SAP HANA through SAP BI Platform using a relational Universe.

Office Analysis, edition for OLAP

In Office Analysis edition for OLAP, you can connect to SAP HANA using an OLAP connection defined in the Central Management Console or in Information design tool.

Explorer

You can create an information space based on an SAP HANA view using JDBC drivers.

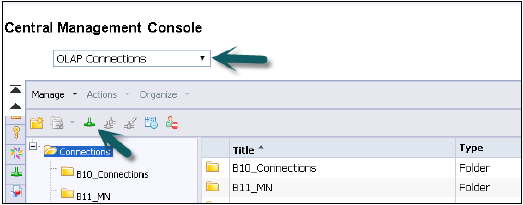

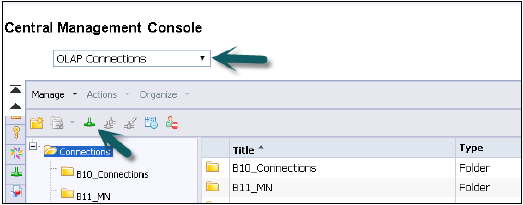

Creating an OLAP Connection in CMC

We can create an OLAP connection for all the BI tools that we want to use on top of HANA views, such as Office Analysis for OLAP, Crystal Reports for Enterprise and Design Studio. A relational connection through IDT is used to connect Web Intelligence and Dashboards to the HANA database.

These connections can be created using IDT as well as CMC, and both are saved in the BO Repository.

Log in to CMC with your user name and password.

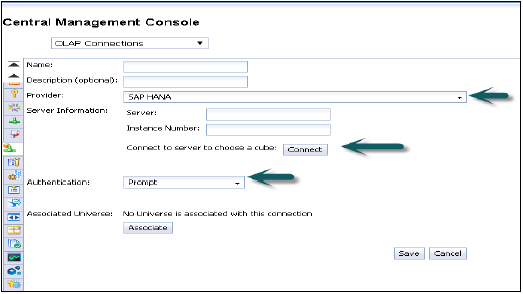

From the drop-down list of connections, choose OLAP Connections. Connections already created in CMC are also shown. To create a new connection, click the green icon.

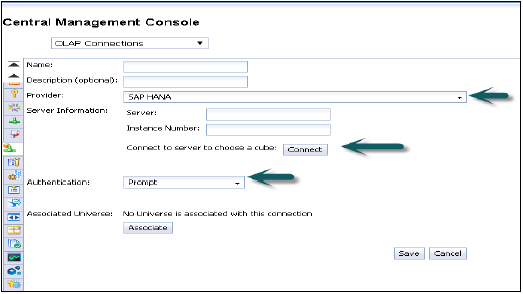

Enter the name and description of the OLAP connection. Multiple users can use this connection to connect to HANA views from different BI Platform tools.

Provider − SAP HANA

Server − Enter HANA Server name

Instance − Instance number

You can choose to connect to a single cube (a single Analytic or Calculation View) or to the full HANA system.

Click Connect, enter the user name and password, and choose the modeling view.

Authentication Types − Three types of Authentication are possible while creating an OLAP connection in CMC.

Predefined − The user name and password are not requested again when this connection is used.

Prompt − The user name and password are requested every time.

SSO − User specific

Enter the user name and password for the HANA system and save; the new connection is added to the existing list of connections.

Now open BI Launchpad to access the BI platform reporting tools, such as Office Analysis for OLAP; you are asked to choose a connection. If you specified an Information View while creating the connection, it is shown by default. Otherwise, click Next, go to the folders and choose the View (Analytic or Calculation View).

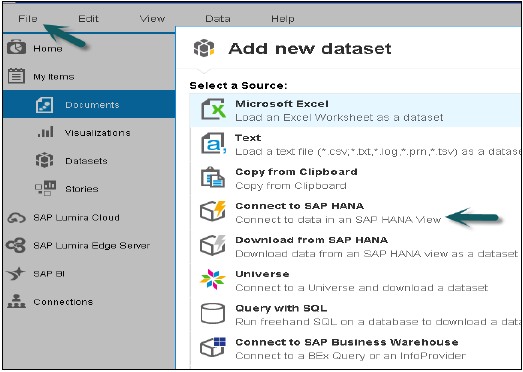

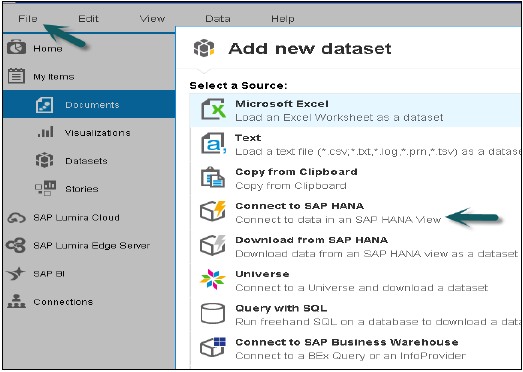

SAP Lumira connectivity with HANA system

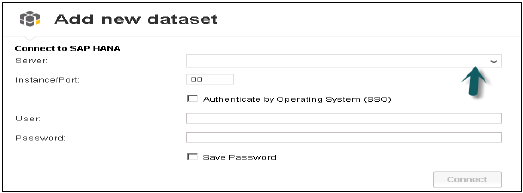

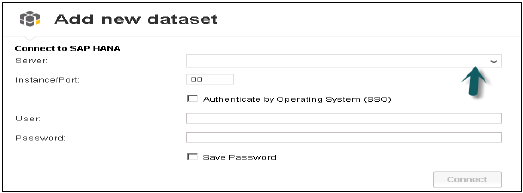

Open SAP Lumira from the Start menu and click File → New → Add new dataset → Connect to SAP HANA → Next.

The difference between Connect to SAP HANA and Download from SAP HANA is that Download copies the data from the HANA system to the BO repository, so the data is not refreshed when it changes in the HANA system. Enter the HANA server name and instance number. Enter the user name and password → click Connect.

All views are shown. You can search by view name → choose the View → Next. All measures and dimensions are shown; choose the attributes you want → click Create.

There are four tabs inside SAP Lumira −

Prepare − You can see the data and do any custom calculation.

Visualize − You can add graphs and charts. Click the + sign on the X axis and Y axis to add attributes.

Compose − This option is used to create a sequence of visualizations (a story) → click Board to add boards → Create → all the visualizations are shown on the left side. Drag in the first visualization, then add a page, then add the second visualization.

Share − If the story is built on SAP HANA, you can only publish it to SAP Lumira Server. Otherwise, you can also publish the story from SAP Lumira to SAP Community Network (SCN) or the BI Platform.

Save the file to use it later → File → Save → choose Local → Save.

Creating a Relational Connection in IDT to use with HANA views in WebI and Dashboard −

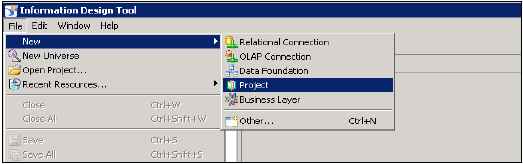

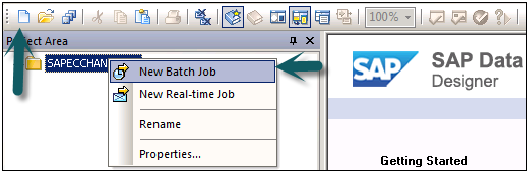

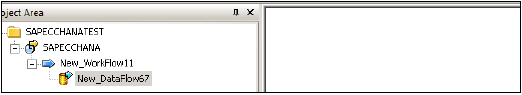

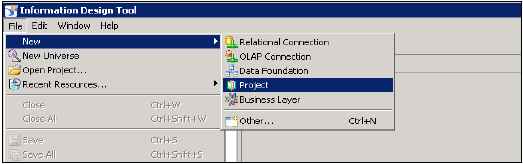

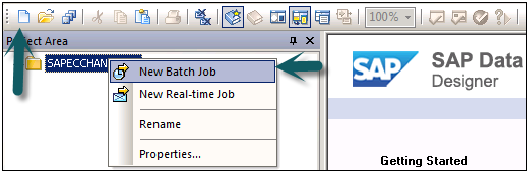

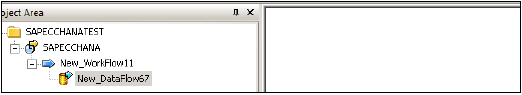

Open the Information Design Tool from the BI Platform Client Tools. Click New → Project → enter the project name → Finish.

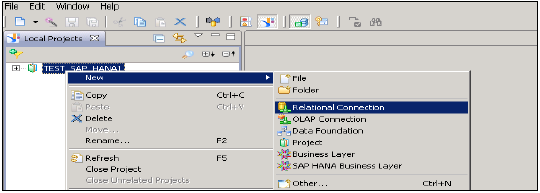

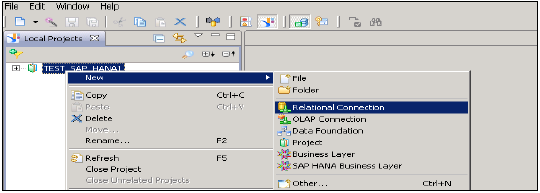

Right-click on Project name → Go to New → Choose Relational Connection → Enter Connection/resource name → Next → choose SAP from list to connect to HANA system → SAP HANA → Select JDBC/ODBC drivers → click on Next → Enter HANA system details → Click on Next and Finish.

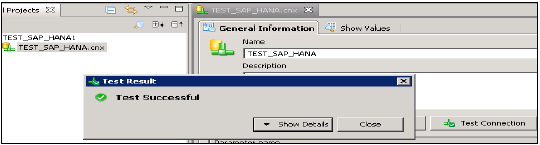

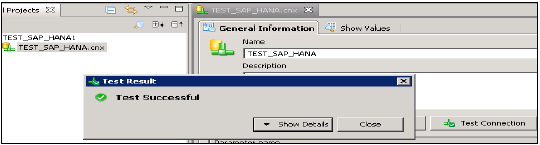

You can also test this connection by clicking on Test Connection option.

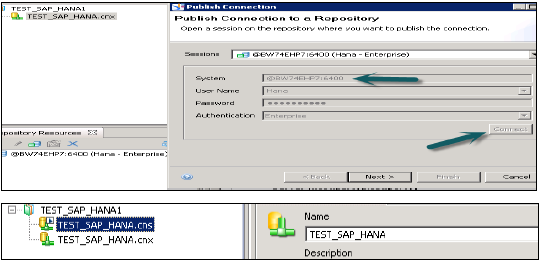

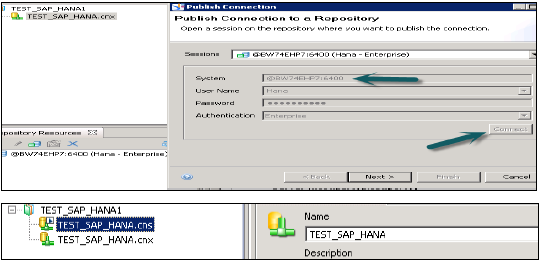

Test Connection → Successful. The next step is to publish this connection to the Repository to make it available for use.

Right Click on connection name → click on Publish connection to Repository → Enter BO Repository name and password → Click on Connect → Next →Finish → Yes.

It will create a new relational connection with .cns extension.

.cns − represents a secured Repository connection, which should be used to create the Data Foundation.

.cnx − represents a local, unsecured connection. If you use this connection when creating a Universe, you will not be able to publish the Universe to the Repository.

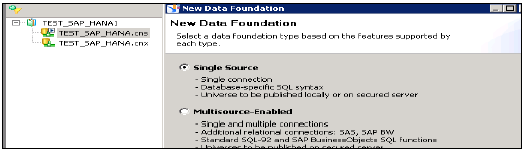

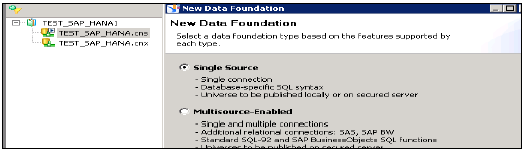

Choose the .cns connection → right-click it → New Data Foundation → enter the name of the Data Foundation → Next → Single-source/Multisource → Next → Finish.

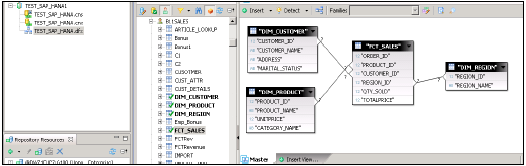

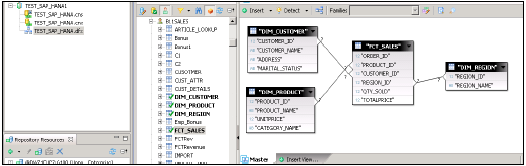

The middle pane shows all the tables in the HANA database along with their schema names.

Import all tables from the HANA database into the master pane to create a universe. Join the dimension (Dim) and fact tables on the primary keys of the dimension tables to create a schema.

Double-click each join and detect its cardinality: click Detect → OK, then click Save All at the top. Next, create a new business layer on the data foundation; the business layer is what the BI application tools consume.
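Cardinality detection works by checking how often each join key occurs on each side of the join. The sketch below shows the idea on in-memory lists; it is a simplification for illustration, not IDT's actual implementation, and the sample keys are hypothetical.

```python
from collections import Counter


def detect_cardinality(left_keys, right_keys):
    """Classify a join between two key columns as 1:1, 1:N, N:1 or N:N.

    A side counts as "1" when every joined key value occurs at most once on that side.
    """
    left_counts = Counter(left_keys)
    right_counts = Counter(right_keys)
    common = left_counts.keys() & right_counts.keys()  # key values that actually join
    left_one = all(left_counts[k] == 1 for k in common)
    right_one = all(right_counts[k] == 1 for k in common)
    return ("1" if left_one else "N") + ":" + ("1" if right_one else "N")


product_dim = [1, 2, 3]        # primary keys of a dimension table
sales_fact = [1, 1, 2, 3, 3]   # matching foreign keys in a fact table
print(detect_cardinality(product_dim, sales_fact))  # 1:N
```

A dimension joined to a fact table on the dimension's primary key normally yields 1:N, which is the pattern the join step above relies on.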

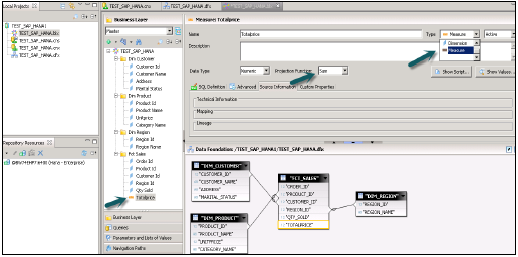

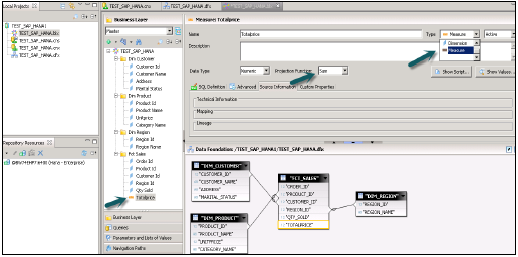

Right-click the .dfx file → New Business Layer → enter a name → Finish. All objects are shown automatically in the master pane. Change dimensions to measures where required (set Type to Measure and change the Projection as required) → Save All.

Right-click the .blx file → Publish → To Repository → Next → Finish. The universe is published successfully.

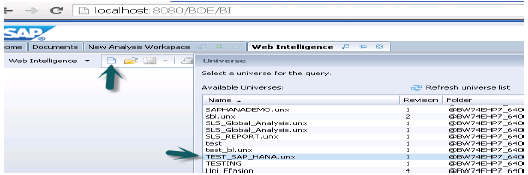

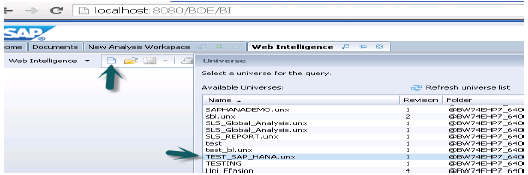

Now open Web Intelligence (WebI) from BI Launchpad, or WebI Rich Client from BI Platform Client Tools → New → select Universe → TEST_SAP_HANA → OK.

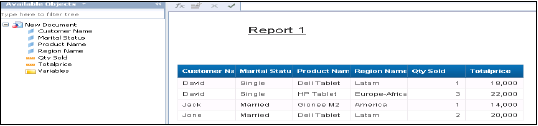

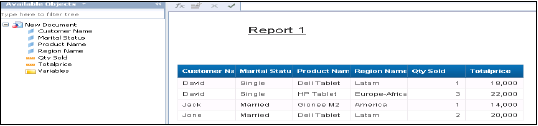

All objects are added to the Query Panel. Choose attributes and measures from the left pane and add them to Result Objects. Clicking Run Query runs the SQL query and generates the output as a WebI report, as shown below.

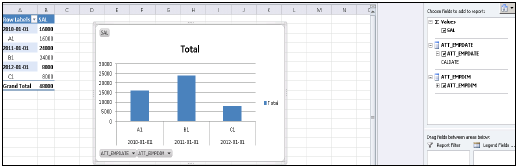

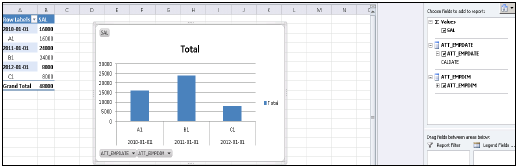

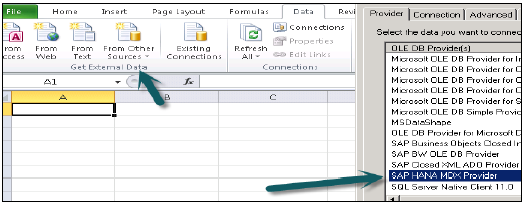
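WebI generates the SQL from the universe, so the exact statement depends on the data foundation and business layer. As a rough, hypothetical illustration, a query whose result objects are dimensions plus measures with a Sum projection produces an aggregate statement of this shape (schema, table and column names are made up):

```python
def build_query(schema, table, dimensions, measures):
    """Group by every dimension and SUM every measure (a simplified projection choice)."""
    columns = [f'"{d}"' for d in dimensions]
    aggregates = [f'SUM("{m}") AS "{m}"' for m in measures]
    sql = f'SELECT {", ".join(columns + aggregates)} FROM "{schema}"."{table}"'
    if columns and aggregates:
        sql += " GROUP BY " + ", ".join(columns)  # aggregate per dimension combination
    return sql


print(build_query("SALES", "SALES_FACT", ["REGION"], ["REVENUE"]))
# SELECT "REGION", SUM("REVENUE") AS "REVENUE" FROM "SALES"."SALES_FACT" GROUP BY "REGION"
```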

SAP HANA - Excel Integration

Many organizations consider Microsoft Excel the most common BI reporting and analysis tool. Business managers and analysts can connect it to the HANA database to build pivot tables and charts for analysis.

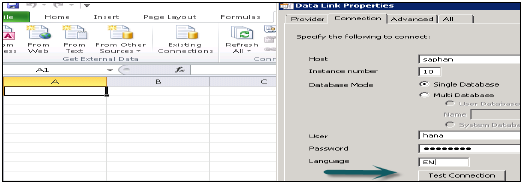

Connecting MS Excel to HANA

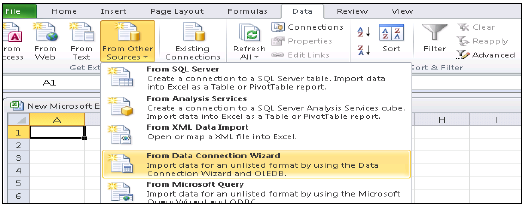

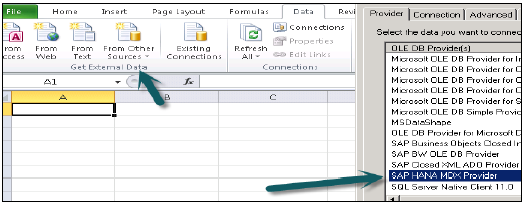

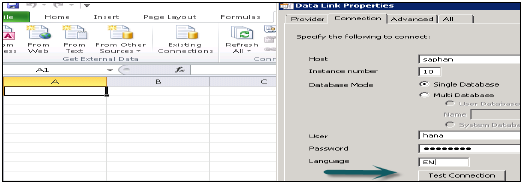

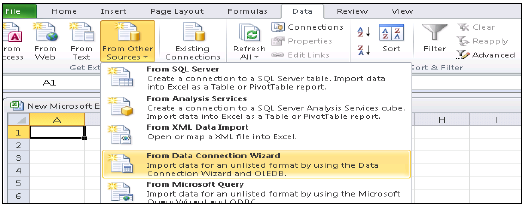

Open Excel and go to the Data tab → From Other Sources → Data Connection Wizard → Other/Advanced → Next. The Data Link Properties dialog opens.

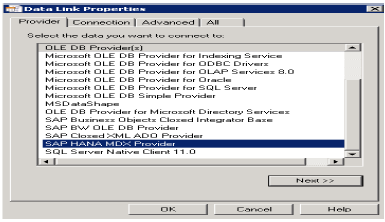

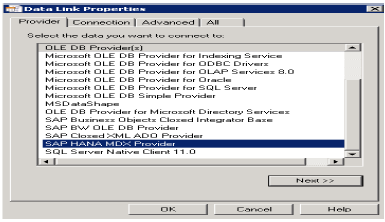

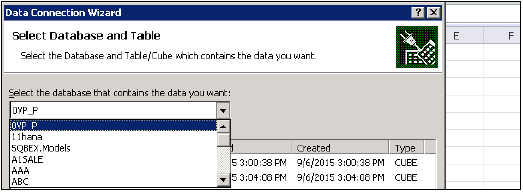

Choose SAP HANA MDX Provider from the list to connect to an MDX data source → enter the HANA system details (server name, instance, user name and password) → Test Connection → when the connection succeeds, click OK.

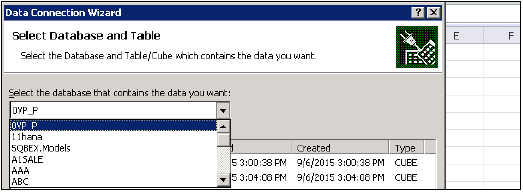

A drop-down list shows all packages available in the HANA system. Choose an information view → Next → select PivotTable (or another option) → OK.

All attributes from the information view are added to MS Excel. You can choose different attributes and measures to report on, as shown, and choose different charts, such as pie charts and bar charts, from the Design options at the top.

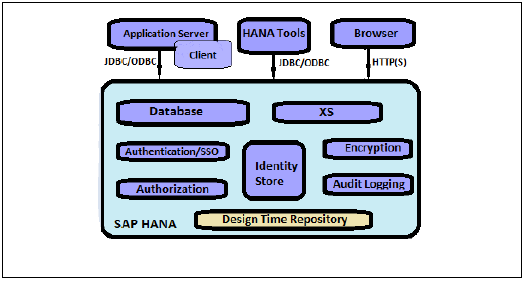

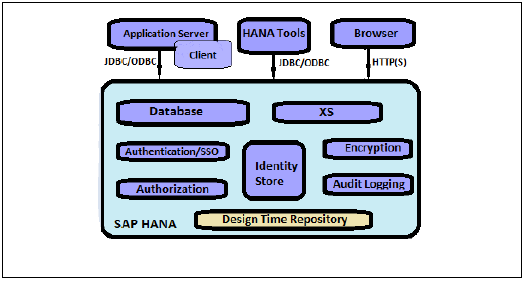

SAP HANA - Security Overview

Security means protecting a company’s critical data from unauthorized access and use, and ensuring that compliance requirements and standards are met as per company policy. SAP HANA enables customers to implement different security policies and procedures to meet the company’s compliance requirements.

SAP HANA supports multiple isolated databases in a single HANA system; these are known as multitenant database containers. A multiple-container system always has exactly one system database and any number of tenant databases (multitenant database containers). An SAP HANA system installed in this environment is identified by a single system ID (SID), and each database container is identified by the SID together with its database name. SAP HANA clients, such as HANA Studio, connect to specific databases.
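To make the identification scheme concrete, here is a small hypothetical model: one system ID, exactly one system database (named SYSTEMDB in SAP HANA) and any number of tenant databases, each addressed by the pair (SID, database name). It is an illustration only, not an SAP API; the SID and tenant names are made up.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DatabaseId:
    sid: str       # system ID shared by every container in the system
    database: str  # database name within that system


@dataclass
class MultitenantSystem:
    sid: str
    tenants: list = field(default_factory=list)  # any number of tenant databases

    SYSTEM_DB = "SYSTEMDB"  # exactly one system database per system (not a dataclass field)

    def add_tenant(self, name: str) -> DatabaseId:
        if name == self.SYSTEM_DB or name in self.tenants:
            raise ValueError(f"database {name} already exists in system {self.sid}")
        self.tenants.append(name)
        return DatabaseId(self.sid, name)

    def databases(self) -> list:
        """The system database followed by all tenant databases."""
        return [DatabaseId(self.sid, self.SYSTEM_DB)] + [
            DatabaseId(self.sid, t) for t in self.tenants
        ]


hdb = MultitenantSystem("HDB")   # hypothetical SID
hdb.add_tenant("SALES")          # hypothetical tenant names
hdb.add_tenant("HR")
for db in hdb.databases():
    print(db.sid, db.database)
```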

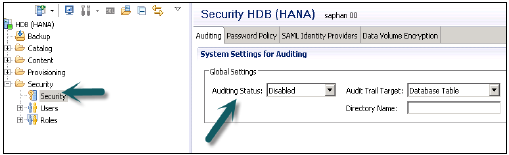

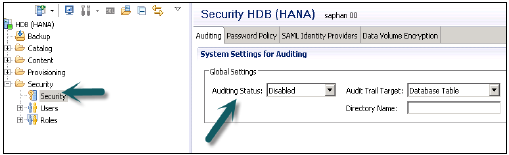

SAP HANA provides the standard security features, such as authentication, authorization, encryption and auditing, along with some additional features specific to multitenant databases.

The security features provided by SAP HANA include −

- User and Role Management

- Authentication and SSO

- Authorization

- Encryption of data communication in the network

- Encryption of data in the persistence layer

Additional features in multitenant HANA databases −

Database Isolation − Prevents cross-tenant attacks through operating system mechanisms.

Configuration Change Blacklist − Prevents tenant database administrators from changing certain system properties.

Restricted Features − Disables certain database features that provide direct access to the file system, the network or other resources.

SAP HANA User and Role Management

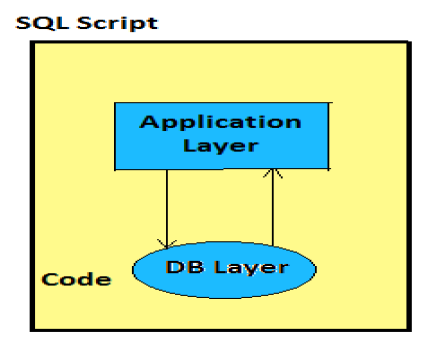

SAP HANA user and role management configuration depends on the architecture of your HANA system.

If SAP HANA is integrated with BI platform tools and acts as a reporting database, end users and roles are managed in the application server.

If end users connect directly to the SAP HANA database, users and roles are required in the database layer of the HANA system for both end users and administrators.

Every user who wants to work with the HANA database must have a database user with the necessary privileges. A user accessing the HANA system can be either a technical user or an end user, depending on the access requirement. After a successful logon, the system verifies that the user is authorized to perform the requested operation; whether the operation can be executed depends on the privileges granted to the user. These privileges can be granted through roles in HANA security. HANA Studio is a powerful tool for managing users and roles in a HANA database system.

User Types

User types vary according to security policies and the privileges assigned to the user profile. A user can be a technical database user or an end user who needs access to the HANA system for reporting or data manipulation.

Standard Users

Standard users can create objects in their own schemas and have read access to system views. Read access is provided by the PUBLIC role, which is assigned to every standard user.

Restricted Users

Restricted users access the HANA system through applications and do not have SQL privileges on the HANA system. When these users are created, they initially have no access.

Compared with standard users −

Restricted users cannot create objects in the HANA database or in their own schemas.

They cannot view any data in the database, because the generic PUBLIC role is not added to their profile as it is for standard users.

By default, they can connect to the HANA database only using HTTP/HTTPS.
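The differences above can be summarized in a small hypothetical model. It only restates the default behaviour described in this section; the protocol labels are illustrative strings, and the class is not part of any SAP API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HanaUser:
    """Hypothetical model of the standard/restricted user differences."""
    name: str
    restricted: bool = False

    def default_roles(self) -> set:
        # Standard users receive PUBLIC automatically; restricted users start with no access.
        return set() if self.restricted else {"PUBLIC"}

    def can_create_objects(self) -> bool:
        # Only standard users may create objects in their own schema.
        return not self.restricted

    def default_protocols(self) -> set:
        # Restricted users connect only via HTTP/HTTPS by default;
        # standard users can also connect through SQL clients (JDBC/ODBC).
        return {"HTTP", "HTTPS"} if self.restricted else {"HTTP", "HTTPS", "JDBC", "ODBC"}


analyst = HanaUser("ANALYST")                     # hypothetical standard user
app_user = HanaUser("APP_USER", restricted=True)  # hypothetical restricted user
print(analyst.default_roles(), app_user.default_roles())
```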

User Administration & Role Management

Technical database users are used only for administrative purposes, such as creating new objects in the database and assigning privileges on packages, applications, etc. to other users.

SAP HANA User Administration Activities

Depending on business needs and the configuration of the HANA system, different user administration activities can be performed with a tool such as HANA Studio.

The most common activities include −

- Creating users

- Granting roles to users

- Defining and creating roles

- Deleting users

- Resetting user passwords

- Reactivating users after too many failed logon attempts

- Deactivating users when required
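Each of these activities can also be performed with SQL. The helper below only builds statement strings for illustration and does not connect to a database. The statement forms follow SAP HANA SQL syntax, but the user, role and password values are hypothetical, and the simple double-quoting of passwords is an assumption to verify against the SQL reference for your release.

```python
def admin_statements(user: str, password: str, role: str) -> dict:
    """Return one SQL statement per administration activity (strings only, nothing is executed)."""
    return {
        "create user": f'CREATE USER {user} PASSWORD "{password}"',
        "create restricted user": f'CREATE RESTRICTED USER {user} PASSWORD "{password}"',
        "create role": f"CREATE ROLE {role}",
        "grant role": f"GRANT {role} TO {user}",
        "reset password": f'ALTER USER {user} PASSWORD "{password}"',
        # Clears the failed-logon counter so a locked user can log on again
        "reactivate after failed logons": f"ALTER USER {user} RESET CONNECT ATTEMPTS",
        "deactivate user": f"ALTER USER {user} DEACTIVATE USER NOW",
        "reactivate user": f"ALTER USER {user} ACTIVATE USER NOW",
        # CASCADE also drops the objects owned by the user
        "delete user": f"DROP USER {user} CASCADE",
    }


for activity, sql in admin_statements("JDOE", "Init1234", "REPORTING").items():
    print(f"{activity:32} {sql}")
```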

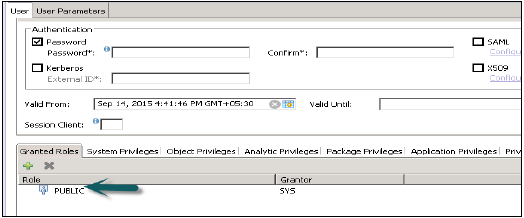

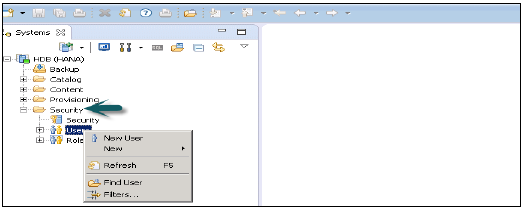

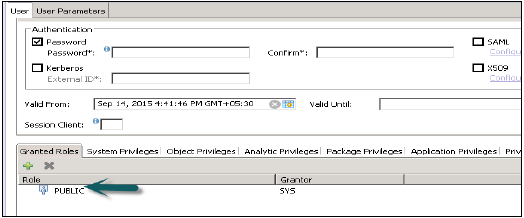

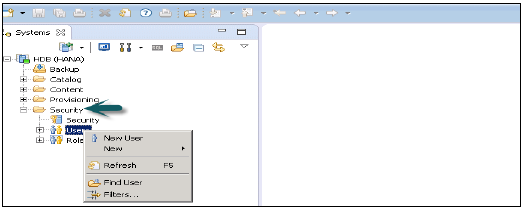

How to create Users in HANA Studio?

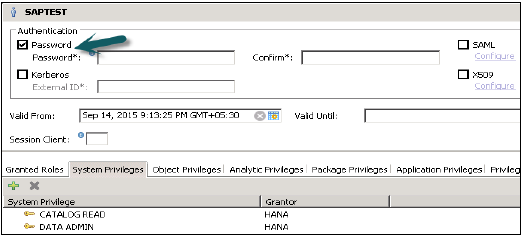

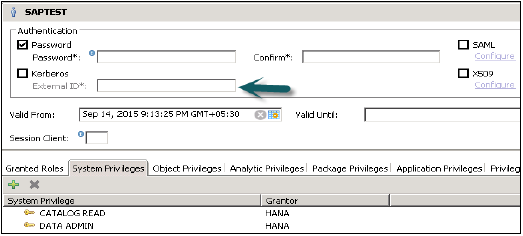

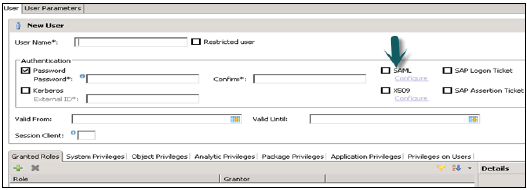

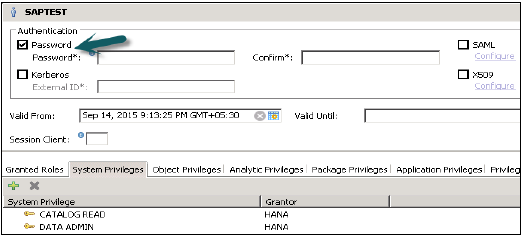

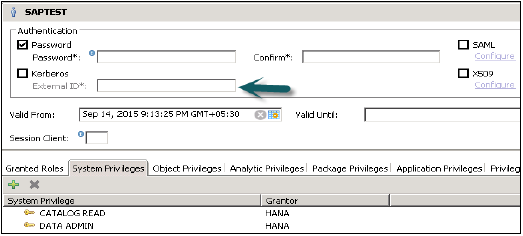

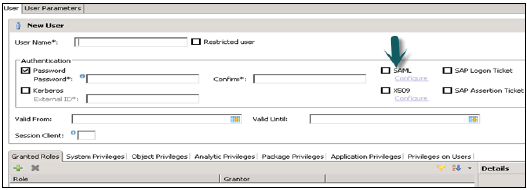

Only database users with the system privilege USER ADMIN can create users, and only users with the system privilege ROLE ADMIN can create roles. To create users and roles in HANA Studio, open the Administration Console. You will see the Security tab in the Systems view −

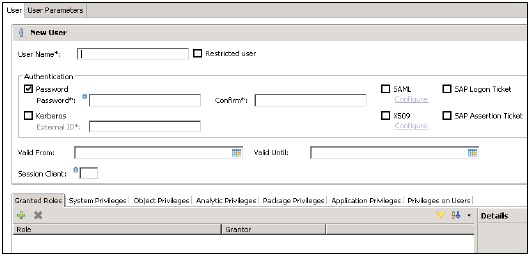

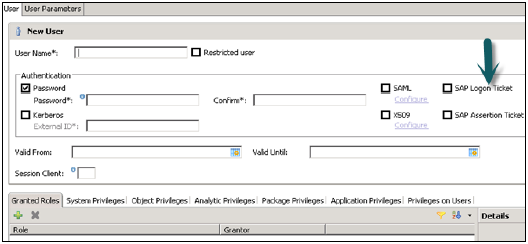

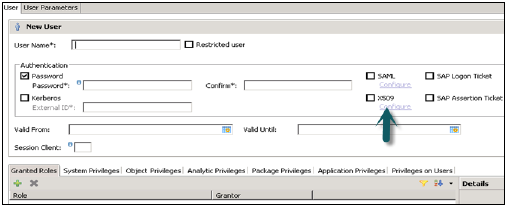

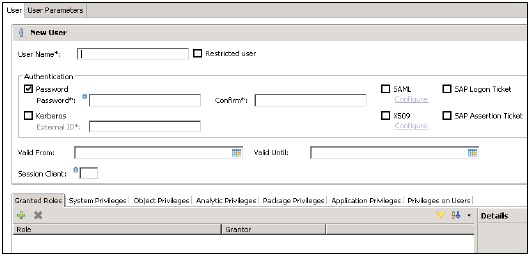

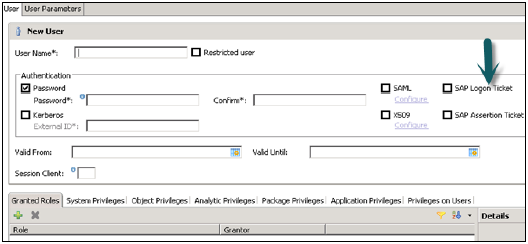

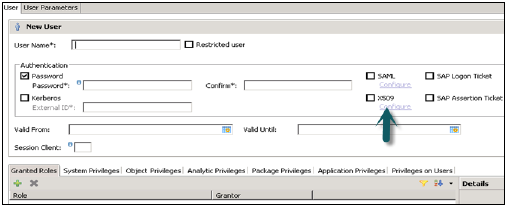

When you expand the Security tab, it shows the Users and Roles options. To create a new user, right-click Users and choose New User. A new window opens where you define the user and the user parameters.

Enter the user name (mandatory) and enter a password in the Authentication field. The password is applied when the new user is saved. You can also choose to create a restricted user.

The specified user name must not be identical to the name of an existing user or role. The password rules include a minimal password length and a definition of which character types (lower, upper, digit, special characters) have to be part of the password.
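The naming and password rules can be sketched as a simple pre-check. The minimum length of 8 and the required character classes (lowercase, uppercase, digit) are assumed values for illustration; the actual values come from your system's password policy.

```python
import string

# Assumed policy values for illustration; real values come from the system's password policy.
MIN_LENGTH = 8
REQUIRED_CLASSES = ("lower", "upper", "digit")

CHARACTER_CLASSES = {
    "lower": set(string.ascii_lowercase),
    "upper": set(string.ascii_uppercase),
    "digit": set(string.digits),
    "special": set(string.punctuation),
}


def check_new_user(user_name, password, existing_names,
                   min_length=MIN_LENGTH, required=REQUIRED_CLASSES):
    """Return the list of rule violations; an empty list means the user may be created."""
    problems = []
    if user_name in existing_names:  # names of existing users *and* roles
        problems.append("name already used by an existing user or role")
    if len(password) < min_length:
        problems.append(f"password shorter than {min_length} characters")
    for cls in required:
        if not set(password) & CHARACTER_CLASSES[cls]:
            problems.append(f"password lacks a {cls} character")
    return problems


print(check_new_user("JDOE", "Initpass", {"SYSTEM", "MODELING"}))
# ['password lacks a digit character']
```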

Different authentication methods can be configured, such as SAML, X.509 certificates and SAP logon tickets. Users in the database can be authenticated by the following mechanisms −

Internal authentication mechanism using a password.

External mechanisms such as Kerberos, SAML, SAP Logon Ticket, SAP Assertion Ticket or X.509.

A user can be authenticated by more than one mechanism at a time. However, only one password and one Kerberos principal name can be valid at any one time. At least one authentication mechanism has to be specified to allow the user to connect and work with the database instance.
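The rules above (several mechanisms at once, only one password and one Kerberos principal, and at least one mechanism before the user can connect) can be captured in a small hypothetical model. The mechanism labels are illustrative strings, not SAP identifiers.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class UserAuthentication:
    """Hypothetical model of the authentication settings of one database user."""
    password: Optional[str] = None            # at most one valid password
    kerberos_principal: Optional[str] = None  # at most one Kerberos principal name
    external: Set[str] = field(default_factory=set)  # e.g. {"SAML", "X.509"} (labels only)

    def set_password(self, new_password: str) -> None:
        # Setting a new password replaces the old one: only one can be valid.
        self.password = new_password

    def set_kerberos_principal(self, principal: str) -> None:
        # Likewise, a new principal name replaces the previous one.
        self.kerberos_principal = principal

    def mechanisms(self) -> Set[str]:
        active = set(self.external)
        if self.password is not None:
            active.add("PASSWORD")
        if self.kerberos_principal is not None:
            active.add("KERBEROS")
        return active

    def can_connect(self) -> bool:
        # At least one mechanism must be specified before the user can connect.
        return bool(self.mechanisms())


auth = UserAuthentication()
print(auth.can_connect())                        # False
auth.set_password("Init1234")
auth.set_kerberos_principal("jdoe@EXAMPLE.COM")  # hypothetical principal
print(sorted(auth.mechanisms()))                 # ['KERBEROS', 'PASSWORD']
```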

You can also define the validity of the user by selecting the start and end dates of a validity interval. Validity is an optional user parameter.
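In SQL, the validity interval corresponds to the VALID FROM ... UNTIL ... clause of CREATE USER and ALTER USER. The check below sketches how such an optional interval behaves; treating the end date as exclusive is an assumption made for illustration.

```python
from datetime import datetime
from typing import Optional


def is_user_valid(now: datetime,
                  valid_from: Optional[datetime] = None,
                  valid_until: Optional[datetime] = None) -> bool:
    """Check a user's optional validity interval; a missing bound leaves that side open.

    The end of the interval is treated as exclusive (an assumption for this sketch).
    """
    if valid_from is not None and now < valid_from:
        return False
    if valid_until is not None and now >= valid_until:
        return False
    return True


today = datetime(2024, 6, 1)
print(is_user_valid(today))                                              # True: no interval set
print(is_user_valid(today, datetime(2024, 1, 1), datetime(2024, 3, 1)))  # False: expired
```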

Users delivered by default with the SAP HANA database include SYS, SYSTEM, _SYS_REPO and _SYS_STATISTICS.

Once this is done, the next step is to define privileges for the user profile. Different types of privileges can be added to a user profile.

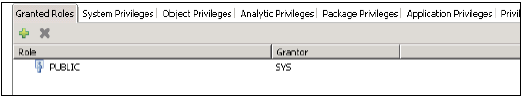

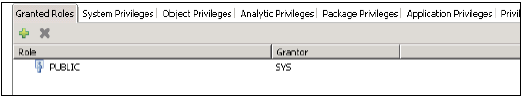

Granted Roles to a User

This tab is used to add built-in SAP HANA roles, or custom roles created under the Roles tab, to the user profile. Custom roles let you define roles according to access requirements and add them directly to a user profile. This removes the need to remember and add individual objects to a user profile every time for different access types.

PUBLIC − This is a generic role that is assigned to all database users by default. It contains read-only access to system views and execute privileges for some procedures. This role cannot be revoked.

Modeling

It contains all privileges required for using the information modeler in the SAP HANA studio.

System Privileges

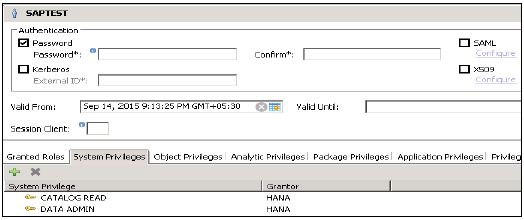

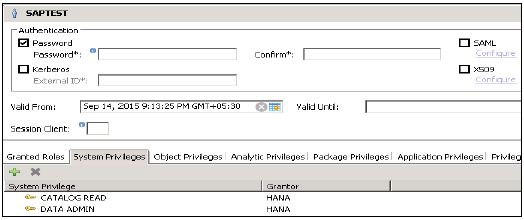

Different system privileges can be added to a user profile. To add a system privilege to a user profile, click the + sign.

System privileges are used for Backup/Restore, User Administration, Instance start and stop, etc.

Content Admin

It contains the same privileges as the MODELING role, with the addition that this role is allowed to grant these privileges to other users. It also contains the repository privileges needed to work with imported objects.

Data Admin

This system privilege is required for reading all data in the system views and for running DDL commands; it is described in more detail in the list below.

Given below are commonly supported system privileges −

Attach Debugger

It authorizes debugging a procedure call made by a different user. The DEBUG privilege on the corresponding procedure is also needed.

Audit Admin

Controls the execution of the following auditing-related commands − CREATE AUDIT POLICY, DROP AUDIT POLICY and ALTER AUDIT POLICY, as well as changes to the auditing configuration. It also allows access to the AUDIT_LOG system view.

Audit Operator

It authorizes the execution of the following command − ALTER SYSTEM CLEAR AUDIT LOG. It also allows access to the AUDIT_LOG system view.

Backup Admin

It authorizes BACKUP and RECOVERY commands for defining and initiating backup and recovery procedures.

Backup Operator

It authorizes the BACKUP command to initiate a backup process.

Catalog Read

It authorizes users to have unfiltered read-only access to all system views. Normally, the content of these views is filtered based on the privileges of the accessing user.

Create Schema

It authorizes the creation of database schemas using the CREATE SCHEMA command. By default, each user owns one schema; with this privilege, the user can create additional schemas.

CREATE STRUCTURED PRIVILEGE

It authorizes the creation of Structured Privileges (Analytical Privileges). Only the owner of an Analytical Privilege can further grant or revoke that privilege to other users or roles.

Credential Admin

It authorizes the credential commands − CREATE/ALTER/DROP CREDENTIAL.

Data Admin

It authorizes reading all data in the system views. It also enables execution of any Data Definition Language (DDL) command in the SAP HANA database.

A user with this privilege cannot select or change data stored in tables for which they do not have access privileges, but they can drop tables or modify table definitions.

Database Admin

It authorizes all commands related to databases in a multiple-database system, such as CREATE, DROP, ALTER, RENAME, BACKUP and RECOVERY.

Export

It authorizes export activity in the database via the EXPORT TABLE command.

Note that besides this privilege, the user requires the SELECT privilege on the source tables to be exported.

Import

It authorizes import activity in the database using the IMPORT commands.

Note that besides this privilege, the user requires the INSERT privilege on the target tables into which data is imported.
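The Export and Import entries both combine a system privilege with an object privilege on the table involved. A hypothetical check, with privileges represented as plain sets of strings and tables as "SCHEMA.TABLE" keys, could look like this:

```python
from typing import Dict, Set


def can_export(system_privileges: Set[str], object_privileges: Dict[str, Set[str]], table: str) -> bool:
    """EXPORT TABLE needs the EXPORT system privilege plus SELECT on the source table."""
    return "EXPORT" in system_privileges and "SELECT" in object_privileges.get(table, set())


def can_import(system_privileges: Set[str], object_privileges: Dict[str, Set[str]], table: str) -> bool:
    """IMPORT needs the IMPORT system privilege plus INSERT on the target table."""
    return "IMPORT" in system_privileges and "INSERT" in object_privileges.get(table, set())


# Hypothetical user: may export SALES.ORDERS but not import into it
system_privs = {"EXPORT"}
object_privs = {"SALES.ORDERS": {"SELECT"}}
print(can_export(system_privs, object_privs, "SALES.ORDERS"))  # True
print(can_import(system_privs, object_privs, "SALES.ORDERS"))  # False
```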

Inifile Admin

It authorizes changing system settings.

License Admin

It authorizes the SET SYSTEM LICENSE command to install a new license.

Log Admin

It authorizes the ALTER SYSTEM LOGGING [ON|OFF] commands to enable or disable the log flush mechanism.

Monitor Admin

It authorizes the ALTER SYSTEM commands for EVENTs.

Optimizer Admin

It authorizes the ALTER SYSTEM commands concerning SQL PLAN CACHE and ALTER SYSTEM UPDATE STATISTICS commands, which influence the behavior of the query optimizer.

Resource Admin

This privilege authorizes commands concerning system resources. For example, ALTER SYSTEM RECLAIM DATAVOLUME and ALTER SYSTEM RESET MONITORING VIEW. It also authorizes many of the commands available in the Management Console.

Role Admin

This privilege authorizes the creation and deletion of roles using the CREATE ROLE and DROP ROLE commands. It also authorizes the granting and revocation of roles using the GRANT and REVOKE commands.

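Activated roles, meaning roles whose creator is the predefined user _SYS_REPO, can neither be granted to other roles or users nor dropped directly; not even users with the ROLE ADMIN privilege can do so. Instead, they are granted and revoked through repository procedures such as _SYS_REPO.GRANT_ACTIVATED_ROLE and _SYS_REPO.REVOKE_ACTIVATED_ROLE. Refer to the SAP HANA documentation on activated objects for details.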

Savepoint Admin

It authorizes the execution of a savepoint process using the ALTER SYSTEM SAVEPOINT command.

Components of the SAP HANA database can create new system privileges. These privileges use the component-name as first identifier of the system privilege and the component-privilege-name as the second identifier.
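The privilege list above can be read as a lookup table from statement to authorizing system privilege. The sketch below restates only the entries described in this section; other privileges (for example DATABASE ADMIN in multitenant systems) may also authorize some of these commands, so treat it as a partial, illustrative map rather than a complete authorization model.

```python
# Command -> system privileges that authorize it, as listed in this section only.
REQUIRED_SYSTEM_PRIVILEGES = {
    "CREATE AUDIT POLICY": {"AUDIT ADMIN"},
    "ALTER AUDIT POLICY": {"AUDIT ADMIN"},
    "DROP AUDIT POLICY": {"AUDIT ADMIN"},
    "ALTER SYSTEM CLEAR AUDIT LOG": {"AUDIT OPERATOR"},
    "BACKUP": {"BACKUP ADMIN", "BACKUP OPERATOR"},
    "RECOVERY": {"BACKUP ADMIN"},
    "CREATE SCHEMA": {"CREATE SCHEMA"},
    "CREATE CREDENTIAL": {"CREDENTIAL ADMIN"},
    "EXPORT TABLE": {"EXPORT"},
    "IMPORT": {"IMPORT"},
    "SET SYSTEM LICENSE": {"LICENSE ADMIN"},
    "ALTER SYSTEM LOGGING": {"LOG ADMIN"},
    "ALTER SYSTEM UPDATE STATISTICS": {"OPTIMIZER ADMIN"},
    "ALTER SYSTEM RECLAIM DATAVOLUME": {"RESOURCE ADMIN"},
    "CREATE ROLE": {"ROLE ADMIN"},
    "DROP ROLE": {"ROLE ADMIN"},
    "ALTER SYSTEM SAVEPOINT": {"SAVEPOINT ADMIN"},
}


def is_authorized(command: str, granted: set) -> bool:
    """True if any granted system privilege authorizes the command.

    Raises KeyError for commands not covered by the table.
    """
    return bool(REQUIRED_SYSTEM_PRIVILEGES[command] & set(granted))


print(is_authorized("BACKUP", {"BACKUP OPERATOR"}))    # True
print(is_authorized("RECOVERY", {"BACKUP OPERATOR"}))  # False
```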

Object/SQL Privileges

Object privileges are also known as SQL privileges. They allow actions such as SELECT, INSERT, UPDATE and DELETE on objects like tables, views or schemas.

Given below are possible types of object privileges −

Object privileges on database objects that exist only at runtime

Object privileges on activated objects created in the repository, like calculation views

Object privileges on a schema containing activated objects created in the repository

Object/SQL privileges are the collection of all DDL and DML privileges on database objects.
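Object privileges are granted with the SQL GRANT statement, either on a single object or on a whole schema. The helper below builds such statements as strings and does not execute them; the schema, table and grantee names are hypothetical.

```python
from typing import Iterable, Optional


def grant_sql(privileges: Iterable[str], grantee: str, schema: str,
              obj: Optional[str] = None, grantable: bool = False) -> str:
    """Build a GRANT statement on a single object, or on a whole schema when obj is None."""
    privs = ", ".join(p.upper() for p in privileges)
    target = f'"{schema}"."{obj}"' if obj is not None else f'SCHEMA "{schema}"'
    sql = f"GRANT {privs} ON {target} TO {grantee}"
    if grantable:
        sql += " WITH GRANT OPTION"  # grantee may pass the privilege on
    return sql


print(grant_sql(["select", "insert"], "JDOE", "SALES", "ORDERS"))
# GRANT SELECT, INSERT ON "SALES"."ORDERS" TO JDOE
print(grant_sql(["SELECT"], "ANALYST", "SALES", grantable=True))
# GRANT SELECT ON SCHEMA "SALES" TO ANALYST WITH GRANT OPTION
```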

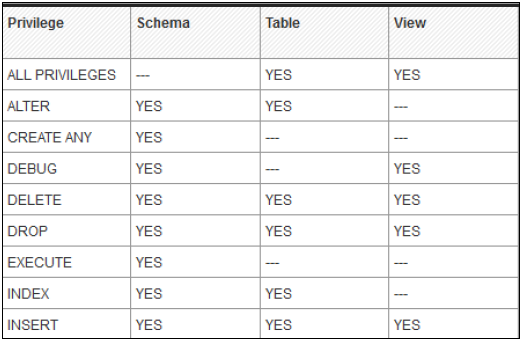

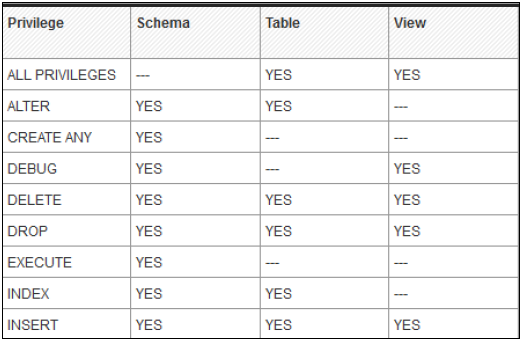

Given below are commonly supported object privileges −

The HANA database contains many kinds of database objects, so not all privileges are applicable to every kind of object.

Object Privileges and their applicability on database objects −

Analytic Privileges

Sometimes data in a view must not be accessible to users who have no business need for that data.

Analytic privileges are used to restrict access to the data in HANA information views, rather than to the views as whole objects. Row- and column-level security can be applied with analytic privileges.

Analytic Privileges are used for −

- Allocation of row and column level security for a specific value range.

- Allocation of row and column level security for modeling views.
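Conceptually, an analytic privilege acts as a filter that the database applies whenever the user queries the view. The sketch below imitates that row-level effect on a list of rows, using either an explicit value list or a value range. SAP HANA enforces analytic privileges inside the database, so this illustrates the semantics only; the column names and values are hypothetical.

```python
def apply_analytic_privilege(rows, attribute, allowed_values=None, allowed_range=None):
    """Keep only the rows whose `attribute` value the user is allowed to see.

    allowed_values: explicit set of permitted values, or None for no value list.
    allowed_range: inclusive (low, high) bounds, or None for no range.
    """
    visible = []
    for row in rows:
        value = row[attribute]
        if allowed_values is not None and value not in allowed_values:
            continue
        if allowed_range is not None and not (allowed_range[0] <= value <= allowed_range[1]):
            continue
        visible.append(row)
    return visible


sales_view = [  # hypothetical output of an information view
    {"REGION": "EMEA", "YEAR": 2023, "REVENUE": 100},
    {"REGION": "APJ", "YEAR": 2024, "REVENUE": 200},
    {"REGION": "EMEA", "YEAR": 2024, "REVENUE": 300},
]
print(apply_analytic_privilege(sales_view, "REGION", allowed_values={"EMEA"}))
print(apply_analytic_privilege(sales_view, "YEAR", allowed_range=(2024, 2024)))
```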

Package Privileges

In the SAP HANA repository, you can set package authorizations for a specific user or for a role. Package privileges are used to allow access to data models (analytic or calculation views) or to other repository objects. All privileges assigned to a repository package also apply to all of its sub-packages. You can also specify whether the assigned authorizations can be passed on to other users.

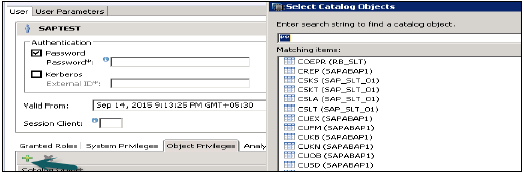

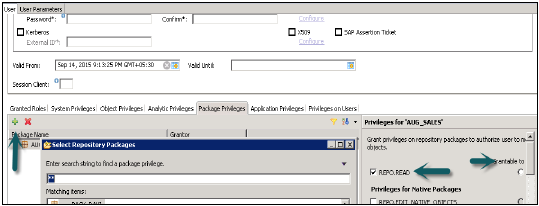

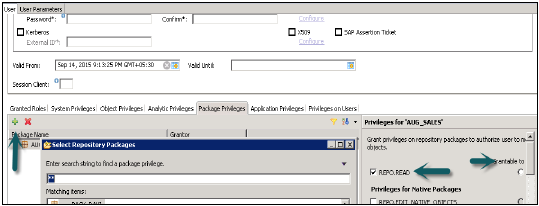

Steps to add package privileges to a user profile −

In the user editor in HANA Studio, click the Package Privileges tab → choose + to add one or more packages. Use the Ctrl key to select multiple packages.

In the Select Repository Package dialog, use all or part of the package name to locate the repository package that you want to authorize access to.

Select one or more repository packages that you want to authorize access to. The selected packages appear in the Package Privileges tab.

Given below are the privileges granted on repository packages to authorize users to read and modify objects −

REPO.READ − Read access to the selected package and design-time objects (both native and imported)

REPO.EDIT_NATIVE_OBJECTS − Authorization to modify native objects (objects created in the current system) in the selected packages.

Grantable to Others − If you choose ‘Yes’, the user can pass the assigned authorization on to other users.
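The inheritance rule (a grant on a package also covers its sub-packages) and the Grantable to Others option can be modelled as follows. Package names are dot-separated as in the SAP HANA repository; the concrete package names and the data structure are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PackageGrant:
    package: str             # dot-separated repository package name
    privilege: str           # e.g. "REPO.READ" or "REPO.EDIT_NATIVE_OBJECTS"
    grantable: bool = False  # the "Grantable to Others" option


def covers(grant_package: str, package: str) -> bool:
    """A grant on a package also applies to every sub-package below it."""
    return package == grant_package or package.startswith(grant_package + ".")


def has_package_privilege(grants, package, privilege, to_grant=False):
    """True if some grant covers the package; to_grant also requires 'Grantable to Others'."""
    return any(
        g.privilege == privilege and covers(g.package, package) and (g.grantable or not to_grant)
        for g in grants
    )


grants = [  # hypothetical packages
    PackageGrant("acme.sales", "REPO.READ", grantable=True),
    PackageGrant("acme.sales.reports", "REPO.EDIT_NATIVE_OBJECTS"),
]
print(has_package_privilege(grants, "acme.sales.reports.q1", "REPO.READ"))  # True (inherited)
print(has_package_privilege(grants, "acme.salesforce", "REPO.READ"))        # False (not a sub-package)
```

Matching on the package name plus a trailing dot avoids treating a sibling such as acme.salesforce as a sub-package of acme.sales.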

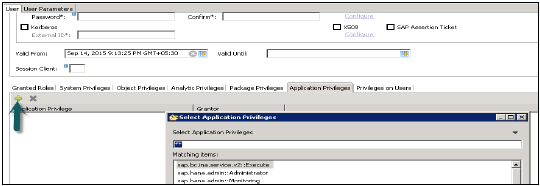

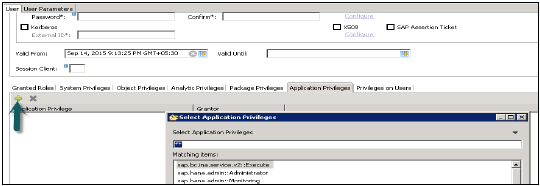

Application Privileges

Application privileges in a user profile define authorization for access to a HANA XS application. They can be assigned to an individual user or to a group of users. Application privileges can also provide different levels of access to the same application, for example advanced functions for database administrators and read-only access for normal users.

To define application-specific privileges for a user profile or a group of users, the following files are used −

- Application-privileges file (.xsprivileges)

- Application-access file (.xsaccess)

- Role-definition file (<RoleName>.hdbrole)
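As a sketch of the first file, assuming the XS classic layout in which a .xsprivileges file is a JSON object with a "privileges" array of name/description entries, and in which privileges are referenced elsewhere (for example in .xsaccess or .hdbrole files) as <package>::<name>, the content could be generated like this. The package and privilege names are hypothetical; check the XS documentation for your release before relying on the exact layout.

```python
import json


def xsprivileges_content(privileges: dict) -> str:
    """Render the JSON body of a .xsprivileges file from {privilege name: description}."""
    body = {"privileges": [{"name": n, "description": d} for n, d in privileges.items()]}
    return json.dumps(body, indent=2)


def qualified_privilege(package: str, name: str) -> str:
    """Application privileges are referenced elsewhere as <package>::<name>."""
    return f"{package}::{name}"


# Hypothetical application package with a read-only and an admin level
print(xsprivileges_content({
    "Basic": "Read-only access for normal users",
    "Admin": "Advanced functions for administrators",
}))
print(qualified_privilege("acme.salesapp", "Admin"))  # acme.salesapp::Admin
```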

SAP HANA - Authentications

All SAP HANA users with access to the HANA database are verified by an authentication method. The SAP HANA system supports various authentication methods, and the login methods for a user are configured when the user profile is created.

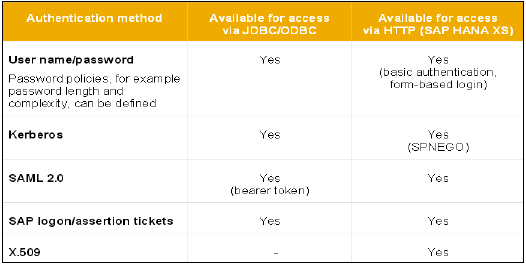

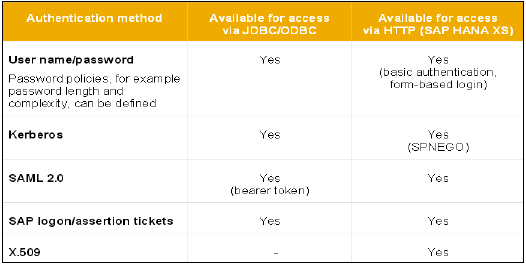

Below is the list of authentication methods supported by SAP HANA −

- User name/Password

- Kerberos

- SAML 2.0

- SAP Logon tickets

- X.509

User Name/Password

This method requires a HANA user to enter a user name and password to log on to the database. The user profile is created under user management in HANA Studio → Security tab.

The password must comply with the password policy, for example the password length, complexity and the use of lower- and uppercase letters.

You can change the password policy to match your organization’s security standards. Note that the password policy cannot be deactivated.

Kerberos